There could be a very serious problem with the past 15 years of research into human brain activity, with a new study suggesting that a bug in fMRI software could invalidate the results of some 40,000 papers.

That's massive, because functional magnetic resonance imaging (fMRI) is one of the best tools we have to measure brain activity, and if it's flawed, it means many of those conclusions about what our brains look like during things like exercise, gaming, love, and drug addiction could be wrong.

"Despite the popularity of fMRI as a tool for studying brain function, the statistical methods used have rarely been validated using real data," researchers led by Anders Eklund from Linköping University in Sweden assert.

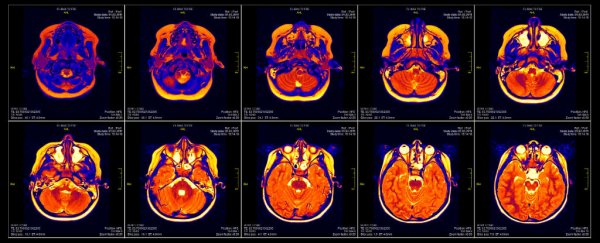

The main problem here is in how scientists use fMRI scans to find sparks of activity in certain regions of the brain. During an experiment, a participant will be asked to perform a certain task, while a massive magnetic field pulsates through their body, picking up tiny changes in the blood flow of the brain.

These tiny changes can signal to scientists that certain regions of the brain have suddenly kicked into gear. During gaming, for example, activity shows up in the insular cortex, a region that has been linked to 'higher' cognitive functions such as language processing, empathy, and compassion.

fMRI scans of people high on mushrooms have shown evidence of cross-brain activity - new and heightened connections across sections that wouldn't normally communicate with each other.

It's fascinating stuff, but the fact is that when scientists are interpreting data from an fMRI machine, they're not looking at the actual brain. As Richard Chirgwin reports for The Register, what they're looking at is an image of the brain divided into tiny 'voxels', then interpreted by a computer program.

"Software, rather than humans … scans the voxels looking for clusters," says Chirgwin. "When you see a claim that 'Scientists know when you're about to move an arm: these images prove it,' they're interpreting what they're told by the statistical software."

To test how good this software actually is, Eklund and his team gathered resting-state fMRI data from 499 healthy people sourced from databases around the world, split them up into groups of 20, and measured them against each other to get 3 million random comparisons.

They tested the three most popular software packages for fMRI analysis - SPM, FSL, and AFNI - and while they shouldn't have found much difference across the groups, the software resulted in false-positive rates of up to 70 percent.

And that's a problem, because as Kate Lunau at Motherboard points out, the team expected to see an average false-positive rate of just 5 percent. The results also suggest that some findings were so inaccurate, they could be indicating brain activity where there was none.
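To make the idea of an expected 5 percent false-positive rate concrete, here is a toy sketch in Python. It is not the method used by Eklund's team, nor anything taken from SPM, FSL, or AFNI. It assumes purely random "scans" with independent voxels and known noise, and it uses a simple per-voxel correction rather than the cluster-based statistics the study examined. The function name, voxel count, and number of comparisons are all made up for illustration. The only figures borrowed from the article are the 499 subjects and the groups of 20.

```python
import random
from statistics import NormalDist, fmean


def familywise_false_positive_rate(n_subjects=499, group_size=20, n_voxels=50,
                                   n_comparisons=400, alpha=0.05,
                                   corrected=True, seed=0):
    """Fraction of null group comparisons that flag at least one voxel.

    Toy assumptions: every voxel is independent standard-normal noise,
    so any 'significant' group difference is a false positive.
    """
    rng = random.Random(seed)
    # Pure-noise "scans": one list of voxel values per subject.
    data = [[rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
            for _ in range(n_subjects)]

    # With correction, split the 5 percent budget across all voxels
    # (a Bonferroni correction); without it, test each voxel at 5 percent.
    per_voxel_alpha = alpha / n_voxels if corrected else alpha
    z_crit = NormalDist().inv_cdf(1 - per_voxel_alpha / 2)

    # Standard error of the difference of two group means (noise sd = 1).
    standard_error = (2.0 / group_size) ** 0.5

    flagged = 0
    for _ in range(n_comparisons):
        # Draw two disjoint random groups from the same healthy pool.
        picked = rng.sample(range(n_subjects), 2 * group_size)
        group_a, group_b = picked[:group_size], picked[group_size:]
        for v in range(n_voxels):
            diff = (fmean(data[i][v] for i in group_a)
                    - fmean(data[i][v] for i in group_b))
            if abs(diff) / standard_error > z_crit:
                flagged += 1  # at least one false alarm in this comparison
                break
    return flagged / n_comparisons


if __name__ == "__main__":
    print("corrected:  ", familywise_false_positive_rate(corrected=True))
    print("uncorrected:", familywise_false_positive_rate(corrected=False))
```

In this toy setup, the corrected rate should land near the 5 percent target, while the uncorrected rate should be far higher. The study's point is that real brain data break the assumptions the software relies on, which is how the false-positive rate can end up much higher than the figure the software reports.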

"These results question the validity of some 40,000 fMRI studies and may have a large impact on the interpretation of neuroimaging results," the team writes in PNAS.

The bad news here is that one of the bugs the team identified has been in the system for the past 15 years, which explains why so many papers could now be affected.

The bug was corrected in May 2015, at the time the researchers started writing up their paper, but the fact that it remained undetected for over a decade shows just how easy it was for something like this to happen, because researchers just haven't had reliable methods for validating fMRI results.

Since fMRI machines became available in the early '90s, neuroscientists and psychologists have been faced with a whole lot of challenges when it comes to validating their results.

One of the biggest obstacles has been the astronomical cost of using these machines - around US$600 per hour - which means studies have been limited to very small sample sizes of up to 30 or so participants, and very few organisations have the funds to run repeat experiments to see if they can replicate the results.

The other issue is that because software is the thing that's actually interpreting the data from the fMRI scans, your results are only as good as your computer, and programs used to validate the results have been prohibitively slow.

But the good news is we've come a long way, and Eklund points to the fact that fMRI results are now being made freely available online for researchers to use, so they don't have to keep paying for fMRI time to record new results, and our validation technology is finally up to snuff.

"It could have taken a single computer maybe 10 or 15 years to run this analysis," Eklund told Motherboard. "But today, it's possible to use a graphics card", to lower the processing time "from 10 years to 20 days".

So going forward, things are looking much more positive, but what of those 40,000 papers that could now be in question?

Last year, we found out that when researchers tried to replicate the results of 100 psychology studies, more than half of the attempts failed. We're now seeing more and more evidence that science is going through a bit of a 'replication crisis', and it's time we addressed it.

Unfortunately, running someone else's experiment for the second, third, or fourth time isn't nearly as exciting as running your own experiment for the first time, but studies like this are showing us why we can no longer avoid it.