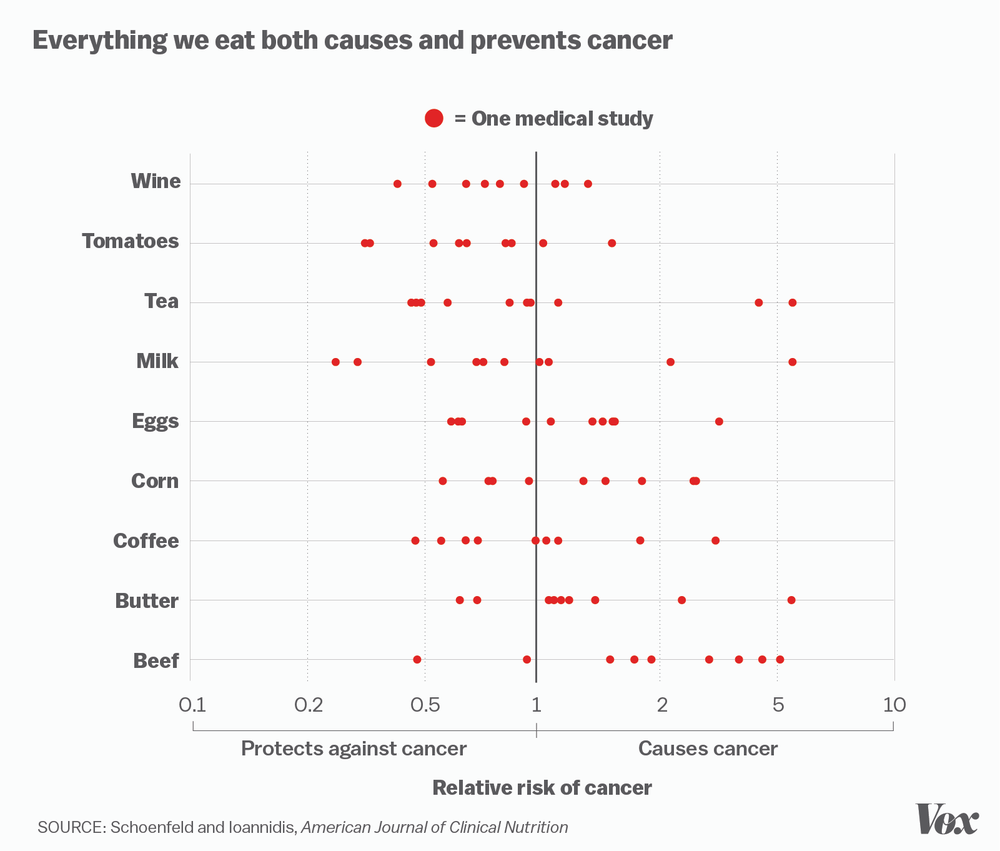

In an effort to illustrate how often medical studies can be flawed, Vox have put together the above graph showing how, in a single meta-analysis of research into food-cancer risk links, you can get some very different results depending on how you design your experiment. You can see the full version below.

The image is based on a 2013 paper called "Is everything we eat associated with cancer? A systematic cookbook review", for which the researchers randomly selected 50 ingredients from a cookbook and looked into recent studies that evaluated the relation of each to cancer risk.

Reporting in The American Journal of Clinical Nutrition, they found that 40 of the ingredients had articles reporting on their cancer risk, and of 264 single-study assessments they looked at, 191 (72 percent) concluded that the tested food was associated with an increased or decreased risk. "Thirty-nine percent of studies concluded that the studied ingredient conferred an increased risk of malignancy; 33 percent concluded that there was a decreased risk, 5 percent concluded that there was a borderline statistically significant effect, and 23 percent concluded that there was no evidence of a clearly increased or decreased risk," the team concludes.

If none of that sounds quite right, it shouldn't. As Julia Belluz says over at Vox, "More often than not, single studies contradict one another - such as the research on foods that cause or prevent cancer. The truth can be found somewhere in the totality of the research."

Head to Vox to read the whole article. It's a fascinating look into the very difficult world of medical science research and reporting. Only by identifying and discussing these crucial limitations will we be able to figure out how to get past them.

Source: Vox