Self-driving cars are almost here, but one big question remains: how do they make hard choices in a life-and-death situation? Now researchers have demonstrated that smart vehicles are capable of making ethical decisions on the road, just like we do every day.

By studying human behaviour in a series of virtual reality-based trials, the team were able to describe moral decision-making in the form of an algorithm. This is huge because researchers had previously assumed that modelling complex ethical choices was out of reach.

"But we found quite the opposite," says Leon Sütfeld, one of the researchers from the University of Osnabrück, Germany. "Human behaviour in dilemma situations can be modelled by a rather simple value-of-life-based model that is attributed by the participant to every human, animal, or inanimate object."

If you take a quick glance at the statistics, humans can be pretty terrible drivers who are often prone to distraction, road rage and drink driving. It's no surprise then that there are almost 1.3 million deaths on the road worldwide each year, with 93 percent of accidents in the US caused by human error.

But is kicking back in the seat of a self-driving car really a safer option? The outlook is promising. One report estimates that driverless vehicles could reduce the number of road deaths by 90 percent, which works out to be around 300,000 saved lives a decade in the US alone.
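As a rough sanity check on those figures, the short Python sketch below works backwards from the two numbers quoted above. The implied baseline of annual US road deaths is not stated in the report as quoted here; it is simply what the 90 percent and 300,000 figures would require, assuming the savings are spread evenly over the decade.

```python
# Back-of-the-envelope check using only the two figures quoted in the article.
# Assumption: the 300,000 lives are spread evenly across ten years.

LIVES_SAVED_PER_DECADE = 300_000  # from the report cited in the article
REDUCTION = 0.90                  # 90 percent fewer road deaths


def lives_saved_per_year(per_decade: float) -> float:
    """Average lives saved per year over a ten-year span."""
    return per_decade / 10


def implied_annual_deaths(per_decade: float, reduction: float) -> float:
    """Annual road deaths that the two quoted figures imply as a baseline."""
    return lives_saved_per_year(per_decade) / reduction


if __name__ == "__main__":
    print(f"Lives saved per year: {lives_saved_per_year(LIVES_SAVED_PER_DECADE):,.0f}")
    print(f"Implied baseline deaths per year: "
          f"{implied_annual_deaths(LIVES_SAVED_PER_DECADE, REDUCTION):,.0f}")
```

In other words, the estimate only holds together if the US sees somewhere in the low tens of thousands of road deaths a year.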

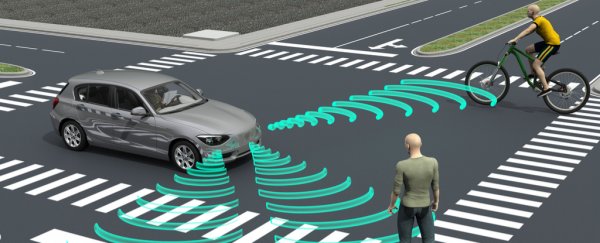

Despite the glowing figures, developing a self-driving car that can respond to unpredictable situations on the road hasn't been a smooth ride. One stumbling block is figuring out how these smart cars will deal with road dilemmas that require ethical decision-making and moral judgement.

While previous research has shown that self-driving cars can avoid accidents by driving slowly at all times or switching to a different driving style, following a set of programmed 'rules' isn't enough to survive the demands of inner city traffic.

And then there's the 'trolley dilemma', a thought experiment that tests how we make moral decisions. In this no-win scenario, a trolley is headed towards five unsuspecting bystanders. The only way to save these five people is by pulling a lever that diverts the trolley onto another track where it kills one person instead. How can a driverless car make the best choice?

The tricky thing with these kinds of decisions is that we tend to make a choice based on the context of the situation, which is difficult to mirror in the form of an algorithm programmed into a machine.

With this in mind, Sütfeld and the team took a closer look at whether modelling human behaviour when driving is as impossible as everyone thinks it is.

Using virtual reality to simulate a foggy road in a suburban setting, the team placed a group of participants in the driver's seat of a car on a two-lane road. A variety of paired obstacles, such as humans, animals and objects, appeared on the virtual road. In each scenario, the participants were forced to decide which obstacle to save and which to run over.

Next, the researchers used these results to test three different models predicting decision making. The first predicted that moral decisions could be explained by a simple value-of-life model, a statistical term measuring the benefits of preventing a death.

The second model assumed that the characteristics of each obstacle, such as the age of a person, played a role in the decision-making process. Lastly, the third model predicted that the participants were less likely to make an ethical choice when they had to respond quickly.

After comparing the results of the analysis, the team found the first model most accurately described the ethical choices of the participants. This means that self-driving cars and other automated machines can make human-like moral choices using a relatively simple algorithm.
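To give a feel for how simple such a model can be, here is a minimal Python sketch of a value-of-life decision rule. It is an illustration only, not the researchers' actual implementation: the obstacle names, the scores in `VALUE_OF_LIFE`, the logistic function and both helper functions are assumptions made up for this example.

```python
import math

# Hypothetical value-of-life scores, invented for illustration.
# They are NOT taken from the study.
VALUE_OF_LIFE = {
    "child": 3.5,
    "adult": 3.0,
    "dog": 1.5,
    "deer": 1.0,
    "trash_can": 0.0,
}


def probability_of_sparing(left: str, right: str) -> float:
    """Chance that the obstacle in the left lane is spared.

    Assumes a logistic function of the difference in value-of-life scores,
    so two equally valued obstacles give a 50/50 split.
    """
    diff = VALUE_OF_LIFE[left] - VALUE_OF_LIFE[right]
    return 1.0 / (1.0 + math.exp(-diff))


def lane_to_drive_into(left: str, right: str) -> str:
    """Pick the lane whose obstacle has the lower value-of-life score."""
    return "right" if probability_of_sparing(left, right) >= 0.5 else "left"


if __name__ == "__main__":
    for pair in [("child", "trash_can"), ("deer", "adult"), ("dog", "dog")]:
        p = probability_of_sparing(*pair)
        print(pair, f"spare left with p={p:.2f}", "->", lane_to_drive_into(*pair))
```

The point of the sketch is that a single number per obstacle is enough to produce a choice in every paired scenario; the hard part is deciding what those numbers should be.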

But before you throw away your driver's license, it's important to remember that these findings open up a whole new realm of ethical and moral debate that needs to be considered before self-driving cars are out on the road.

"Now that we know how to implement human ethical decisions into machines we, as a society, are still left with a double dilemma," says Peter König, one of the researchers on the study. "Firstly, we have to decide whether moral values should be included in guidelines for machine behaviour, and if they are, should machines act just like humans?"

While we still have a way to go before we take our hands off the steering wheel for good, these findings are a big leap forward in the world of intelligent machines.

The research was published in Frontiers in Behavioral Neuroscience.