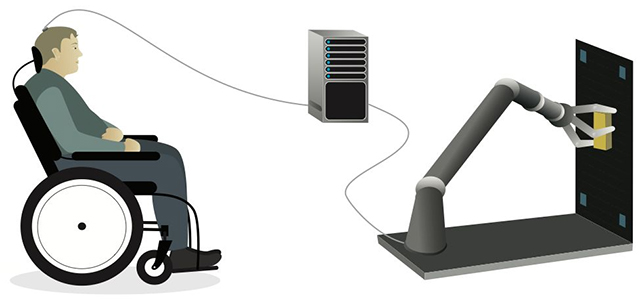

A newly developed system combining AI and robotics has helped a man with tetraplegia turn his thoughts into robotic arm movements, including grasping and releasing objects, and it kept working for seven months without major readjustment.

That's far longer than the handful of days such setups typically last before they have to be recalibrated, which shows the promise and potential of the technology, according to the research team at the University of California, San Francisco (UCSF).

Crucial to this brain-computer interface (BCI) are the AI algorithms that map specific brain signals to specific movements. The man could watch the robotic arm move in real time while imagining the movements, so errors could be corrected quickly and the arm's actions became more accurate.

"This blending of learning between humans and AI is the next phase for these brain-computer interfaces," says neurologist Karunesh Ganguly, from UCSF. "It's what we need to achieve sophisticated, life-like function."

Guiding the robotic arm with his thoughts alone, the man could open a cupboard, take out a cup, and place it under a drink dispenser. The technology has the potential to help people with disabilities carry out a wide variety of everyday tasks.

Over the course of the research, the team found that the shape of the brain activity patterns associated with movement stayed the same, but their location drifted slightly over time, which is thought to happen as the brain learns and takes on new information.

The AI was able to account for this drift, which meant the system didn't need frequent recalibration. What's more, the researchers are confident the speed and the accuracy of the setup can be improved over time.
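To make the idea of "same shape, shifted location" more concrete, here is a toy Python sketch. It is not the decoder used in the UCSF study, which this article doesn't describe. It assumes, purely for illustration, that brain activity can be summarized as a 1-D pattern across a row of recording channels and that drift is a small sideways shift of that pattern. The hypothetical `decode` function tries every shift up to `max_shift` channels and picks the movement template whose shape matches best; the template values are made up.

```python
import math
from typing import Dict, List, Tuple

# Toy illustration only: NOT the method used in the study.


def shift(pattern: List[float], k: int) -> List[float]:
    """Move a 1-D activity pattern k channels along the array (k < 0 moves it
    the other way). Values shifted off the edge are dropped; vacated channels become 0."""
    n = len(pattern)
    return [pattern[i - k] if 0 <= i - k < n else 0.0 for i in range(n)]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: compares pattern *shape*, ignoring overall amplitude."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def best_shift(template: List[float], observed: List[float], max_shift: int) -> Tuple[int, float]:
    """Try every shift in [-max_shift, max_shift]; return the best (shift, score)."""
    candidates = (
        (k, cosine(shift(template, k), observed))
        for k in range(-max_shift, max_shift + 1)
    )
    return max(candidates, key=lambda pair: pair[1])


def decode(templates: Dict[str, List[float]], observed: List[float], max_shift: int = 3) -> Tuple[str, int]:
    """Pick the movement whose template, after compensating for drift, best
    matches the observed pattern. Returns (movement name, estimated shift)."""
    scored = {name: best_shift(t, observed, max_shift) for name, t in templates.items()}
    name = max(scored, key=lambda nm: scored[nm][1])
    return name, scored[name][0]


# Hypothetical calibration-day templates across 10 recording channels.
templates = {
    "grasp": [0, 0, 1, 3, 1, 0, 0, 0, 0, 0],
    "release": [0, 0, 0, 0, 0, 2, 0, 2, 0, 0],
}

# Later on, the "grasp" pattern keeps its shape but has drifted by 2 channels.
observed = shift(templates["grasp"], 2)
print(decode(templates, observed))  # ('grasp', 2)
```

Because the sketch compares shapes across a small range of shifts rather than reading fixed channel positions, a pattern that has only moved is still recognized without recalibrating the templates. Real neural recordings are far noisier and higher-dimensional than this.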

"Notably, the neuroprosthetic here was completely under volitional control with no machine assistance," write the researchers in their published paper.

"We anticipate that vision-based assist can lead to remarkable improvements in performance, particularly for complex object interactions."

This isn't a simple or cheap system to set up. It relies on brain implants that read neural activity using a technique called electrocorticography (ECoG), which records from electrodes placed on the surface of the brain, plus a computer that translates that activity into robotic arm movements.

However, it's evidence that we now have the technology to see which neural patterns are linked to thoughts about which physical actions – and that those patterns can be tracked even as they move around in the brain.

We've also seen similar systems give a voice to people who can no longer speak, and help a man with tetraplegia play chess. There's still plenty of work to do, but as the technology continues to improve, more complex actions should become possible.

"I'm very confident that we've learned how to build the system now, and that we can make this work," says Ganguly.

The research has been published in Cell.