If you think your mind is the only safe place left for all your secrets, think again, because scientists are making real steps towards reading your thoughts and putting them on a screen for everyone to see.

A team from the University of Oregon has built a system that can read people's thoughts via brain scans, and reconstruct the faces they were visualising in their heads. As you'll soon see, the results were pretty damn creepy.

"We can take someone's memory - which is typically something internal and private - and we can pull it out from their brains," one of the team, neuroscientist Brice Kuhl, told Brian Resnick at Vox.

Here's how it works. The researchers selected 23 volunteers, and compiled a set of 1,000 colour photos of random people's faces. The volunteers were shown these pictures while hooked up to an fMRI machine, which detects subtle changes in the blood flow of the brain to measure their neurological activity.

Also hooked up to the fMRI machine was an artificial intelligence program that read the participants' brain activity in real time, while taking in a mathematical description of each face they were shown. The researchers assigned 300 numbers to certain physical features on the faces to help the AI 'see' them as code.

Basically, this first phase was a training session for the AI - it needed to learn how certain bursts of neurological activity correlated with certain physical features on the faces.

Once the AI had formed enough brain activity-face code match-ups, the team started phase two of the experiment. This time, the AI was hooked up only to the fMRI machine, and had to figure out what the faces looked like from the participants' brain activity alone.

All the faces shown to the participants in this round were completely different from the previous round.
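To make the two phases a bit more concrete, here's a minimal Python sketch run on made-up data. It assumes the 'AI' is a simple ridge-regularised linear decoder, which is an illustrative guess and not something the article confirms. It also shrinks the 300-number face code down to 3 numbers and the brain scan down to 8 simulated 'voxels'. Every name in it (`fit_decoder`, `decode`, `simulate`) is hypothetical, and none of it is the team's actual code.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [row_a[:] + row_b[:] for row_a, row_b in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def fit_decoder(activity, codes, ridge=1e-3):
    """Phase one: learn weights that map brain activity to face codes."""
    xt = transpose(activity)
    gram = matmul(xt, activity)
    for i in range(len(gram)):
        gram[i][i] += ridge  # small ridge term keeps the system solvable
    return solve(gram, matmul(xt, codes))

def decode(activity, weights):
    """Phase two: predict face codes from brain activity alone."""
    return matmul(activity, weights)

def simulate(n_faces, mixing, rng, noise=0.05):
    """Invent face codes and the noisy 'brain activity' they evoke (toy data)."""
    codes = [[rng.gauss(0, 1) for _ in mixing] for _ in range(n_faces)]
    activity = [[v + rng.gauss(0, noise) for v in row]
                for row in matmul(codes, mixing)]
    return codes, activity

rng = random.Random(0)
N_FEATURES, N_VOXELS = 3, 8  # stand-ins for 300 face numbers and real voxels
mixing = [[rng.gauss(0, 1) for _ in range(N_VOXELS)] for _ in range(N_FEATURES)]

# Phase one: the decoder sees both the activity and the face codes.
train_codes, train_activity = simulate(200, mixing, rng)
weights = fit_decoder(train_activity, train_codes)

# Phase two: brand-new faces, and the decoder only gets the activity.
test_codes, test_activity = simulate(20, mixing, rng)
predicted = decode(test_activity, weights)

mean_abs_error = sum(
    abs(p - t)
    for pred_row, true_row in zip(predicted, test_codes)
    for p, t in zip(pred_row, true_row)
) / (len(test_codes) * N_FEATURES)
print(f"mean absolute error on unseen faces: {mean_abs_error:.3f}")
```

If the decoder has learned anything, the printed error should land well below the roughly 0.8 you'd expect from guessing an all-zero code for every face in this toy setup.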

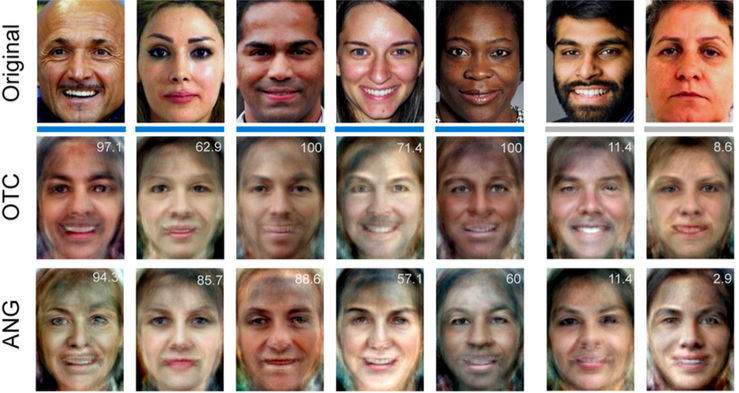

The machine managed to reconstruct each face based on activity from two separate regions in the brain: the angular gyrus (ANG), which is involved in a number of processes related to language, number processing, spatial awareness, and the formation of vivid memories; and the occipitotemporal cortex (OTC), which processes visual cues.

You can see the (really strange) results below:

The Journal of Neuroscience

So, um, yep, we're not going to be strapping down criminals and drawing perfect reconstructions of a crime scene based on their memories, or using the memories of victims to construct mug shots of criminals, any time soon.

But the researchers proved something very important: as Resnick reports for Vox, when they showed the strange reconstructions to another set of participants, those participants could correctly answer questions that described the original faces seen by the group hooked up to the fMRI machine.

"[The researchers] showed these reconstructed images to a separate group of online survey respondents and asked simple questions like, 'Is this male or female?' 'Is this person happy or sad?' and 'Is their skin colour light or dark?'

To a degree greater than chance, the responses checked out. These basic details of the faces can be gleaned from mind reading."

So one set of people could read the thoughts of another set of people - to a point - via a machine.
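What does "greater than chance" look like in numbers? Here's a small Python sketch, assuming each question is a two-way choice, so pure guessing is right half the time. The function name `p_value_above_chance` and the counts in the example are hypothetical and chosen only for illustration; they are not figures from the study.

```python
from math import comb

def p_value_above_chance(correct, total, chance=0.5):
    """One-sided binomial test: the probability of getting at least
    `correct` answers right out of `total` by guessing alone."""
    return sum(
        comb(total, k) * chance**k * (1 - chance) ** (total - k)
        for k in range(correct, total + 1)
    )

# Hypothetical example: 60 correct answers out of 100 two-way questions.
p = p_value_above_chance(60, 100)
print(f"chance of doing this well by guessing: {p:.4f}")
```

The smaller that probability, the harder it is to write off the respondents' answers as lucky guesses.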

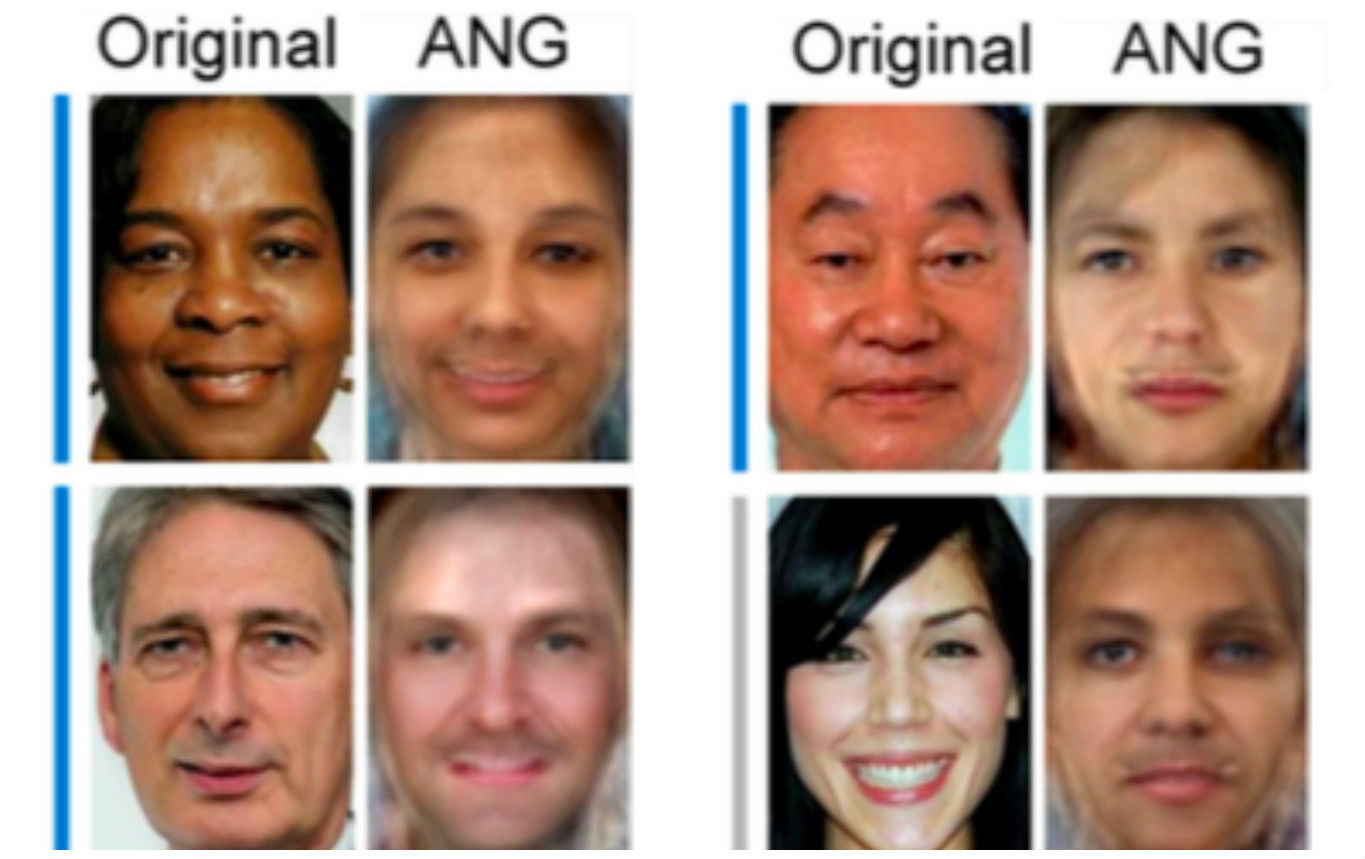

The team is now working on an even tougher task - getting their participants to see a face and hold it in their memory, and then having the AI reconstruct it based on the person's memory of what the face looked like.

As you can imagine, this is SUPER hard to do, and the results make that pretty obvious:

The Journal of Neuroscience

It's janky as hell, but there's potential here, and that's pretty freaking cool, especially when we consider just how fast technology like this can advance given the right resources.

Maybe one day we'll be able to cut out the middle man and send pictures - not just words - directly to each other using just our thoughts… No, argh, stop sending me telepathy porn, damn it.

The study has been published in The Journal of Neuroscience.