Amidst the excitement surrounding ChatGPT and the impressive power and potential of artificial intelligence (AI), the impact on the environment has been somewhat overlooked.

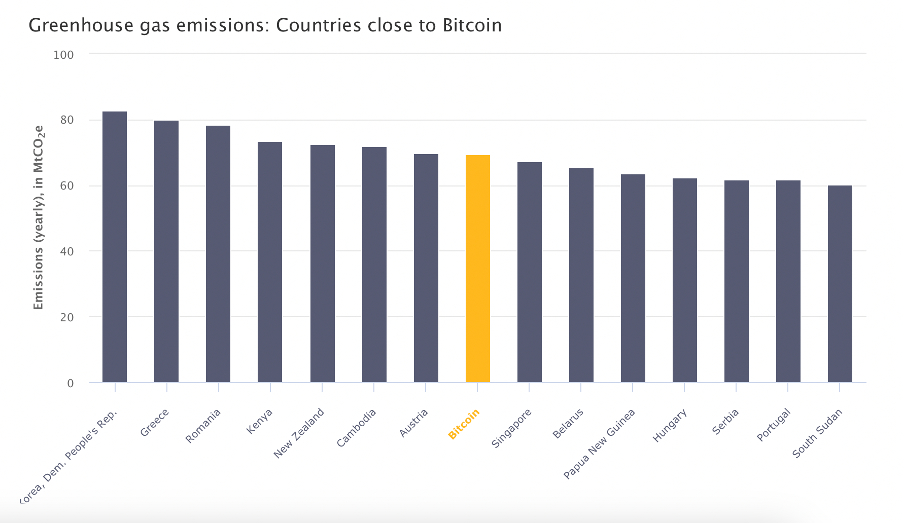

Analysts predict that AI's carbon footprint could be as bad as, if not worse than, that of bitcoin mining, which currently generates more greenhouse gases than some entire countries.

With record-shattering heat across land, sky, and sea, that is the last thing our planet's fragile life-support systems need.

Currently, the entire IT industry is responsible for around 2 percent of global CO2 emissions. If the AI industry continues along its current trajectory, it will consume 3.5 percent of global electricity by 2030, predicts consulting firm Gartner.

"Fundamentally speaking, if you do want to save the planet with AI, you have to consider also the environmental footprint," Sasha Luccioni, an ethics researcher at the open-source machine learning platform Hugging Face, told The Guardian.

"It doesn't make sense to burn a forest and then use AI to track deforestation."

OpenAI spends an estimated US$700,000 per day on computing costs alone to deliver its chatbot service to more than 100 million users worldwide.

The popularity of Microsoft-backed ChatGPT has set off an arms race between the tech giants, with Google and Amazon quickly committing resources to develop natural language processing systems of their own.

Many companies have banned the use of ChatGPT but are developing their own AI in-house.

Like cryptocurrency mining, AI depends on high-powered graphics processing units to crunch data. ChatGPT is powered by gigantic data centers using tens of thousands of these energy-hungry computer chips.

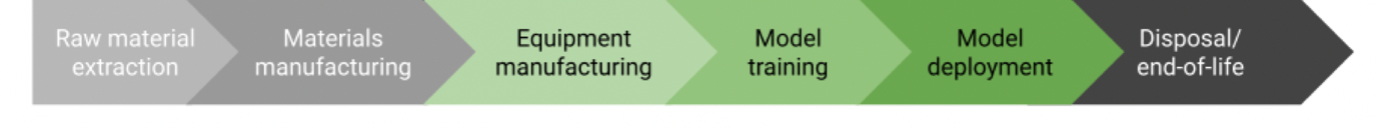

The total environmental impact of ChatGPT and other AI systems is complex to calculate, and much of the information required to do so is not available to researchers.

"Obviously these companies don't like to disclose what model they are using and how much carbon it emits," computer scientist Roy Schwartz from the Hebrew University of Jerusalem told Bloomberg.

It's also hard to predict exactly how much AI will scale up over the next few years, or how energy-efficient it will become.

Researchers have estimated that training GPT-3, the predecessor of ChatGPT, on a dataset of more than 500 billion words would have required 1,287 megawatt hours of electricity and 10,000 computer chips.

The same amount of energy would power around 121 homes for a year in the United States.

This training process would have produced around 550 tonnes of carbon dioxide, which is equivalent to flying from Australia to the UK 33 times.
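As a rough sanity check, the GPT-3 estimates above can be turned into two implied ratios: electricity per home per year, and average carbon intensity of the electricity used. This is a minimal sketch that simply divides the article's own figures; the constant and function names are hypothetical, and the inputs are published estimates rather than measurements.

```python
# Estimates quoted in the article for training GPT-3 (not measured values).
TRAINING_ENERGY_MWH = 1_287
HOMES_POWERED_FOR_A_YEAR = 121
TRAINING_EMISSIONS_TONNES_CO2 = 550


def implied_home_use_mwh(energy_mwh: float, homes: int) -> float:
    """Annual electricity per home implied by the 'homes powered' comparison."""
    return energy_mwh / homes


def implied_intensity_kg_per_kwh(emissions_t: float, energy_mwh: float) -> float:
    """Average carbon intensity implied by the emissions and energy figures.

    Tonnes -> kg multiplies by 1,000 and MWh -> kWh also multiplies by 1,000,
    so tonnes per MWh is numerically equal to kg per kWh.
    """
    return emissions_t / energy_mwh


if __name__ == "__main__":
    per_home = implied_home_use_mwh(TRAINING_ENERGY_MWH, HOMES_POWERED_FOR_A_YEAR)
    intensity = implied_intensity_kg_per_kwh(TRAINING_EMISSIONS_TONNES_CO2, TRAINING_ENERGY_MWH)
    print(f"{per_home:.1f} MWh per home per year")
    print(f"{intensity:.2f} kg CO2 per kWh")
```

Dividing the figures this way implies roughly 10.6 MWh per home per year and about 0.43 kg of CO2 per kWh. Both follow directly from the quoted estimates, so they are only as reliable as those estimates.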

GPT-4, released in March 2023, reportedly has 570 times more parameters than GPT-3, although OpenAI has not disclosed the figure. That suggests it may use more energy than its predecessors.

Another language model called BLOOM was found to consume 433 megawatt hours of electricity when it was trained on 1.6 terabytes of data.

If the growth of the AI sector is anything like that of cryptocurrency, it is only going to become more energy-intensive over time.

Bitcoin now consumes 66 times more energy than it did in 2015, so much that China has banned cryptocurrency mining and New York has restricted it.

Computers must complete lengthy calculations to mine crypto, and it can take up to a month to earn a single bitcoin.
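The "lengthy calculations" behind mining are a proof-of-work search. A miner repeatedly hashes data with a changing number (a nonce) until the hash meets a difficulty target. The toy sketch below is only an illustration and is not Bitcoin's actual scheme. Bitcoin applies SHA-256 twice to an 80-byte block header and compares the result against a numeric target. This simplified version instead uses a single SHA-256 over a string and counts leading hexadecimal zeros. The function name `toy_proof_of_work` and its parameters are hypothetical.

```python
import hashlib


def toy_proof_of_work(block_data: str, difficulty: int) -> tuple[int, str]:
    """Find a nonce whose SHA-256 hash of (block_data + nonce) starts with
    `difficulty` hexadecimal zeros. Simplified illustration, not Bitcoin's format."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        # Hash the data combined with the current guess.
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        # No luck: try the next nonce. This brute-force loop is where the energy goes.
        nonce += 1


if __name__ == "__main__":
    nonce, digest = toy_proof_of_work("example block", difficulty=4)
    print(nonce, digest)
```

Each additional required zero multiplies the expected number of attempts by 16. The network raises difficulty as more computing power joins, which is why the energy spent per coin keeps climbing.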

Bitcoin mining burns through 137 million megawatt hours of electricity a year, with a carbon footprint almost as large as New Zealand's.

Innovation and protecting Earth's limited resources require a careful balancing act.