Entropy is one of those fearsomely deep concepts that forms the core of an entire field of physics (in this case, thermodynamics). Unfortunately, it is so mathematical that it's difficult to explain in plain language. But we will give it a try.

Whenever I see the word entropy, I like to replace it with the phrase "counting the number of ways that I can rearrange a scenario while leaving it largely the same." That's a bit of a mouthful, I agree, and so entropy will have to do.

You get up on a weekend morning and decide to finally tackle the monumental task of cleaning your bedroom.

You pick up, clean, fold, and put away your clothes. You straighten your sheets. You fluff your pillows. You organize your underwear drawer.

After hours of effort you stand back to admire your handiwork, but already you can feel a sense of unease spooling up in your stomach. Before long, you just know, it will be messy again.

You instinctively know this because there is but one singular way to have a perfectly orderly room, with a place for everything and everything in its place.

There is only one way to have this precise scenario. Since you're now familiar with the word, you can feel free to use it: you might say that a perfectly clean room has very low entropy.

Let's introduce some disorder. You take one unpaired sock and toss it in your room. It is now messy.

And you can place a quantifiable measure on this messiness. Your lonely sock can be on the floor. It can be on the bed. It can be sticking half out of a drawer.

There are a number of ways that you can rearrange this scenario – the appearance of one untidy sock in your room – while keeping the general picture the same. The entropy is higher.

And then your dog, or your kids, or your dog and kids come crashing into the room. Chaos ensues.

Nothing is where it's supposed to be, and there is an almost infinite number of ways to achieve the same level of disarray.

The entropy – and the frustration – is very high indeed.
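To make the counting concrete, here is a toy model of the bedroom. It is our own illustration, not something from physics textbooks: assume every item that is not put away can land in any of a fixed number of spots, independently of the others, so the number of arrangements is W = spots^items. Entropy is then taken as S = ln W, in units of Boltzmann's constant. The item and spot counts are made-up numbers.

```python
import math


def count_arrangements(loose_items: int, spots: int) -> int:
    """Ways to place `loose_items` distinguishable items among `spots` locations.

    Toy assumption: each loose item can be in any spot independently,
    and items that are put away have exactly one allowed place.
    """
    return spots ** loose_items


def entropy(arrangements: int) -> float:
    """Boltzmann-style entropy S = ln W, in units of Boltzmann's constant."""
    return math.log(arrangements)


# Hypothetical scenarios from the bedroom story
clean = count_arrangements(0, 3)     # everything put away: exactly one way
one_sock = count_arrangements(1, 3)  # one sock: floor, bed, or half out of a drawer
chaos = count_arrangements(50, 20)   # dog and kids: 50 items scattered over 20 spots

for label, w in [("clean room", clean), ("one sock", one_sock), ("chaos", chaos)]:
    print(f"{label}: S = {entropy(w):.2f} k_B")
```

The clean room has S = 0, because ln 1 = 0. A single sock already gives a positive entropy, and the chaotic room's entropy is much larger, even though W itself is an enormous 66-digit number. Taking the logarithm is what keeps these counts manageable.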

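Physicists like using entropy because it also serves as a handy way to quantify the information in a system: the more ways a system can be rearranged, the more information it takes to specify exactly which arrangement it is in.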

So by measuring the entropy – a quantity physicists are very comfortable with handling – they can also get a grip on the amount of information in a system.
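One standard way to make the entropy-information link explicit is to count bits. Picking out one arrangement among W equally likely ones takes log2(W) bits, and the thermodynamic entropy is S = k_B ln W = (k_B ln 2) × bits. The sketch below assumes all arrangements are equally likely. The example counts are hypothetical and reuse the bedroom picture.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def bits_to_specify(arrangements: int) -> float:
    """Bits needed to single out one of `arrangements` equally likely states."""
    return math.log2(arrangements)


def entropy_from_bits(bits: float) -> float:
    """Thermodynamic entropy in J/K carried by `bits` of missing information."""
    return K_B * math.log(2) * bits


# Hypothetical arrangement counts
for label, w in [("clean room", 1), ("one sock, 3 spots", 3), ("50 items, 20 spots", 20**50)]:
    bits = bits_to_specify(w)
    print(f"{label}: {bits:.1f} bits, S = {entropy_from_bits(bits):.3e} J/K")
```

Because k_B is so small, even a very messy room corresponds to a tiny entropy in J/K. Everyday thermodynamic entropies are large only because real objects contain an astronomical number of molecules.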

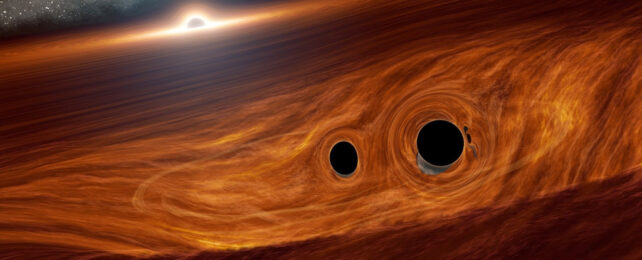

This applies to any system in the universe, including black holes.

Beginning in 1981, physicist Jacob Bekenstein – whose work came so close that we nearly call it Bekenstein radiation instead of Hawking radiation – discovered two remarkable, unintuitive facts about black holes and their event horizons.

One, a black hole holds the greatest amount of entropy that any region of the same size in the universe can possibly have.

Put another way, black holes are spheres of maximum entropy. Let that sink in.

No matter how messy your room gets, no matter how much you deliberately or inadvertently increase its entropy, you can never, ever beat the entropy of a room-sized black hole. That fact should immediately raise some troubling but intriguing questions.

Of all of the wonderful creations in the universe, why did nature choose black holes to contain the most entropy? Is this a mere coincidence, or is this teaching us something valuable about the connection between quantum mechanics, gravity, and information?

That sense of twinned unease and excitement should ratchet up when you learn the second fact about black holes that Bekenstein discovered: when you add information to a black hole, it gets larger.

That in and of itself is not surprising, but black holes – and only black holes – grow in such a way that their surface area, not their volume, increases in proportion to the amount of new information passing into them.
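The article doesn't give a formula, but the standard Bekenstein-Hawking relation, S = k_B c³ A / (4 G ħ), makes the area scaling concrete. Here is a minimal sketch, assuming a non-rotating, uncharged (Schwarzschild) black hole, whose horizon radius is r = 2GM/c². It compares a black hole with one of twice the mass. The "volume" here is the naive Euclidean sphere volume, used only for comparison, since the interior volume of a black hole is not well defined.

```python
import math

# Physical constants in SI units (CODATA 2018 values)
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
K_B = 1.380649e-23       # Boltzmann constant, J/K


def schwarzschild_radius(mass_kg: float) -> float:
    """Event-horizon radius of a non-rotating, uncharged black hole."""
    return 2 * G * mass_kg / C**2


def horizon_area(radius_m: float) -> float:
    """Surface area of a sphere of the given radius."""
    return 4 * math.pi * radius_m**2


def sphere_volume(radius_m: float) -> float:
    """Naive Euclidean sphere volume, used only for comparison."""
    return 4 / 3 * math.pi * radius_m**3


def bekenstein_hawking_entropy(area_m2: float) -> float:
    """S = k_B c^3 A / (4 G hbar) in J/K: proportional to area, not volume."""
    return K_B * C**3 * area_m2 / (4 * G * HBAR)


# Compare a black hole with one of twice the mass (and hence twice the radius).
m = 1.989e30  # roughly one solar mass, kg
r1, r2 = schwarzschild_radius(m), schwarzschild_radius(2 * m)
s1 = bekenstein_hawking_entropy(horizon_area(r1))
s2 = bekenstein_hawking_entropy(horizon_area(r2))

print(f"radius ratio:  {r2 / r1:.1f}")
print(f"entropy ratio: {s2 / s1:.1f}")  # follows the area ratio
print(f"volume ratio:  {sphere_volume(r2) / sphere_volume(r1):.1f}")
```

Doubling the radius multiplies the entropy by 4, matching the horizon area, rather than by 8, which is what a volume-based count would predict.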

If you take any other system in the universe – a star consuming a planet, you consuming a cheeseburger – the entropy and the information of the combined system go up. And so does the volume (both for the star and for you).

And the volume goes up in proportion to the increase in information.

But black holes, for some reason that we still do not fathom, defy this common-sense, intuitive picture.

Paul M. Sutter is a theoretical cosmologist and award-winning science communicator. He's a research professor at the Institute for Advanced Computational Science at Stony Brook University and a guest researcher at the Center for Computational Astrophysics with the Flatiron Institute in New York City.

This article was originally published by Universe Today.