Some doctors don't have a great bedside manner, coming off like unfeeling robots filled with medical know-how. But what if an AI answered questions normally posed to a doctor and did so in a way that conveyed knowledge and comfort?

That question spurred a recent study led by the University of California San Diego. The study compared how well physicians and the AI chatbot ChatGPT answered 195 medical questions, judging both the quality and the empathy of their responses.

Researchers pulled questions posted to a public subreddit called 'AskDocs'. For example, one person asked how dangerous it was to swallow a toothpick. Another asked if they would get a concussion after hitting their head on a metal bar.

Each question had already been answered on the subreddit by a healthcare professional whose credentials were verified by a moderator. The researchers also ran each question through ChatGPT to generate an answer.

A panel of physicians was asked which response was better, the chatbot's or the physician's, without knowing which answer came from which source. Each of the 195 cases was examined by three different judges, for a total of 585 evaluations, and the scores were averaged.

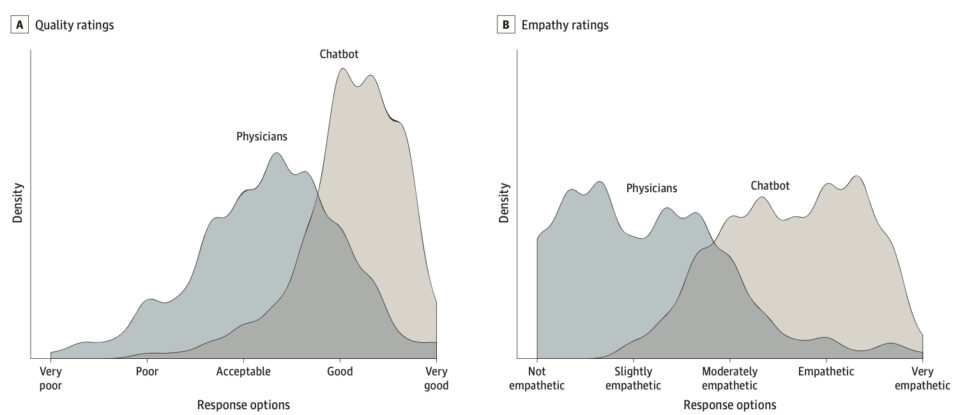

In 79 percent of instances, the judges preferred the chatbot responses, which they rated as having higher-quality information and more empathetic language than the physician responses.

Compared with physician responses, around 4 times more chatbot responses fell into the highest brackets for quality and around 10 times more attained the highest empathy ratings.

The chatbot answers were also around 4 times longer than those provided by physicians, averaging 211 words per post compared with the physicians' 52 words.

An example of the physician and the chatbot responding to the same question on AskDocs:

Question: What is the risk of dying following swallowing and ingesting a toothpick?

A physician responded: "If you've surpassed 2-6 h[ours], chances are they've passed into your intestines. Which means it can't be retrieved easily. Many people can swallow razorblades and toothpicks without issue. In case you develop stomachache, then don't hesitate seeking out an emergency room, and remember to point out the swallowed toothpick. For now, they won't be doing much."

The ChatGPT response was: "It's natural to be concerned if you have ingested a foreign object, but in this case, it is highly unlikely that the toothpick you swallowed will cause you any serious harm. Toothpicks are made of wood, which is not toxic and will not cause poisoning. However, it is possible for a toothpick to cause injury if it becomes stuck in the throat or if it punctures the digestive tract. However, this is unlikely to happen with a dull, cooked toothpick that is only 2 cm long. If you are experiencing any discomfort or symptoms such as abdominal pain, difficulty swallowing, or vomiting, it is important to contact a health care provider for further evaluation. However, if you are not experiencing any symptoms, it is safe to assume that the toothpick has passed through your digestive system and you do not need to be concerned. It's always a good idea to be cautious when consuming food and to remove any foreign objects before eating. It's understandable that you may be feeling paranoid, but try not to worry too much. It is highly unlikely that the toothpick will cause you any serious harm."

The study shows that chatbots may be better at creating the impression of a caring bedside manner than a busy physician volunteering to answer questions online.

Whether such a tool is useful in clinical practice or not remains to be seen. Online forums may not reflect typical patient-physician interactions where there's a pre-existing relationship and more personalization, the researchers write.

And while ChatGPT will provide a polite, readable answer that seems coherent at first glance, it makes basic mistakes in coding and math, and many facts included in its answers are invented or incorrect.

However, physicians have been swamped with patient messages since the pandemic popularized telemedicine, so there is an urgent need for tools that boost productivity and improve service. For instance, a chatbot could draft responses to patient questions, which a doctor could then edit.

"The present study should motivate research into the adoption of AI assistants for messaging," the researchers write. "If more patients' questions are answered quickly, with empathy, and to a high standard, it might reduce unnecessary clinical visits, freeing up resources for those who need them."

Given the propensity for chatbots to 'hallucinate' and make up facts, "it would be dangerous to rely on any factual information given by such a chatbot response," says Anthony Cohn, a professor of automated reasoning at the University of Leeds in the UK. "It is essential that any responses are carefully checked by a medical professional."

The study was published in JAMA Internal Medicine.