Computers are getting better and better at recognising objects - we've now taught them to distinguish individual faces, certain objects and even emotions. But how does the world look to a computer?

Researchers from the University of Wyoming and Cornell University in the US decided to test what differences remain between computer vision and human vision, and discovered that, unsurprisingly, technology sees the world very differently to us.

In order to work out how computers see, they worked with an image recognition algorithm called a deep neural network (DNN), which is capable of identifying objects in images with near-human precision.

They then paired this algorithm with a second one, which evolves images. When the second algorithm is guided by human judgement, the images it produces become clearer and clearer.

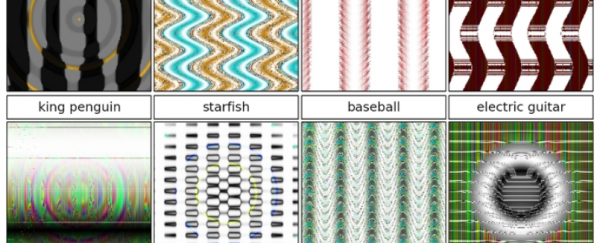

But when they used the second algorithm with a DNN instead, the odd-looking images above appeared. The white-noise-esque set below was also produced as part of the experiment.

To us, they look like a random pattern of colours and shapes, but the computers were able to identify the objects with 99.99 percent certainty. The results are published on arXiv.org.
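For readers who want a feel for how this kind of experiment fits together, here is a minimal Python sketch of the general idea: an evolutionary loop that keeps whichever images a classifier is most confident about and mutates them. It is not the researchers' code. The "classifier" is a hypothetical stand-in (a single random linear layer with a softmax, not a trained DNN), and every name and parameter, including `make_toy_classifier`, `evolve_fooling_image`, the population size and the mutation rate, is an assumption made for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_toy_classifier(n_pixels, n_classes, rng):
    """Hypothetical stand-in for a trained DNN: one random linear layer."""
    weights = [[rng.gauss(0, 1) for _ in range(n_pixels)]
               for _ in range(n_classes)]

    def classify(image):
        scores = [sum(w * p for w, p in zip(row, image)) for row in weights]
        return softmax(scores)

    return classify


def evolve_fooling_image(classify, n_pixels, target, rng,
                         generations=100, pop_size=20, mutation_rate=0.1):
    """Evolve a flat list of pixel values in [0, 1] towards high confidence
    for class `target`. Returns the best image and the best confidence
    recorded at each generation."""

    def fitness(image):
        return classify(image)[target]

    # Start from pure noise.
    population = [[rng.random() for _ in range(n_pixels)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Keep the top half unchanged, so the best score never drops.
        survivors = population[:pop_size // 2]
        children = []
        for parent in survivors:
            # Nudge a random subset of pixels, clamped to [0, 1].
            child = [
                min(1.0, max(0.0, p + rng.gauss(0, 0.2)))
                if rng.random() < mutation_rate else p
                for p in parent
            ]
            children.append(child)
        population = survivors + children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


if __name__ == "__main__":
    rng = random.Random(0)
    classify = make_toy_classifier(n_pixels=64, n_classes=5, rng=rng)
    image, history = evolve_fooling_image(classify, 64, target=2, rng=rng)
    print(f"confidence at start: {history[0]:.4f}")
    print(f"confidence at end:   {history[-1]:.4f}")
```

Nothing in the loop rewards an image for looking like anything to a person. The only signal is the classifier's confidence, which is one way to see how the search can end up with patterns that score highly while remaining meaningless to us.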

"We were expecting that we would get the same thing, a lot of very high-quality recognisable images," Jeff Clune, the lead researcher, told Jacob Aron at New Scientist. "Instead we got these rather bizarre images: a cheetah that looks nothing like a cheetah."

Aron explains what's going on in his article for New Scientist:

"The algorithm's confusion is due to differences in how it sees the world compared with humans, says Clune. While we identify a cheetah by looking for the whole package — the right body shape, patterning and so on — a DNN is only interested in the parts of an object that most distinguish it from others."

In a way, it's almost as though these fuzzy images are like the optical illusions that trick humans into seeing steps that aren't there or identical objects as different sizes.

But although it's funny (and a little bit sad) to think about poor old computers being tricked by a bunch of dots, this poses a pretty serious problem. If we can trick computers into confidently seeing objects and animals with a bunch of fuzzy lines, what's to stop someone from using a pattern to hack visual recognition software?

Clune will now be using this research to understand more about how computer vision can be fooled and ways we can stop it.

In the future he's also hoping the research will teach us more about how our own vision works - something that we still don't fully comprehend.

As Jürgen Schmidhuber from the Dalle Molle Institute for Artificial Intelligence Research in Switzerland, who was not involved in the research, told New Scientist: "These networks make predictions about what neuroscientists will find in a couple of decades, once they are able to decode and read the synapses in the human brain."

Source: New Scientist, Discovery News, Gizmodo