In spite of the advances made towards making quantum computers practical, qubit-based systems remain unstable and highly vulnerable to errors, something Google may have taken a major step towards fixing.

With a newly unveiled quantum chip called Willow, Google engineers have passed a significant milestone in error handling. Specifically, they've been able to keep a single logical qubit stable enough that errors occur roughly once an hour, a vast improvement on previous setups that failed every few seconds.

Qubits are the basic building blocks of quantum information. Unlike the bits of classical computing, which can store a 1 or a 0, qubits can store a 1, a 0, or a superposition of both. That combination is a powerful tool for designing algorithms that can crunch problems that would take a classical computer far too long to solve, if it could manage them at all.

Unfortunately, qubits are delicate things, their superpositions prone to entangling with the environment and losing their mathematical properties. While today's systems are robust enough to ensure 99.9 percent reliability (about one error in a thousand), practical systems need the error rate to be closer to one in a trillion.

To counter errors in these fragile qubits, researchers can spread a single logical qubit across a number of particles in superposition. However, this scaling only works if the extra physical qubits are correcting errors noticeably faster than they're producing them.

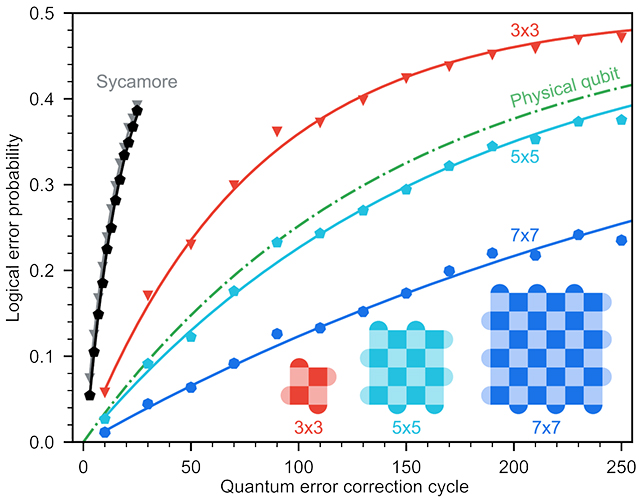

"Willow is the first processor where error-corrected qubits get exponentially better as they get bigger," write Michael Newman and Kevin Satzinger, research scientists from the Google Quantum AI team.

"Each time we increase our encoded qubits from a 3×3 to a 5×5 to a 7×7 lattice of physical qubits, the encoded error rate is suppressed by a factor of two."

Willow has 105 physical qubits, and a combination of its architecture and the error-correcting algorithms it uses has led to its success in terms of stability, where more qubits mean fewer errors.
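The scaling Newman and Satzinger describe can be sketched numerically. The snippet below is an illustration only: the starting error rate of `1e-3` is a hypothetical placeholder rather than a measured Willow figure, the function name is made up for this example, and it assumes the factor-of-two suppression applies at every step from one odd lattice size to the next.

```python
def logical_error_rate(distance, base_rate=1e-3, base_distance=3, suppression=2.0):
    """Illustrative error rate for a distance x distance lattice.

    base_rate is a hypothetical error rate for the 3x3 lattice (an assumption,
    not a Willow measurement). Each step up in lattice size (3 -> 5 -> 7 ...)
    divides the error rate by `suppression`.
    """
    if distance < base_distance or (distance - base_distance) % 2 != 0:
        raise ValueError("distance must be an odd lattice size >= base_distance")
    steps = (distance - base_distance) // 2  # number of lattice-size increases
    return base_rate / suppression ** steps


# Print the illustrative error rate for the three lattice sizes in the quote.
for d in (3, 5, 7):
    print(f"{d}x{d} lattice: error rate {logical_error_rate(d):.2e}")
```

Because the error rate is divided by a constant factor at each step, it falls exponentially with the number of steps, which is the behavior the researchers are describing.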

Getting error rates to fall as more qubits are added has been a challenge since quantum error correction techniques were first introduced in the mid-1990s. While there's still a long road ahead to fully realized quantum computing, large-scale quantum operations may at least be feasible following this approach.

"This demonstrates the exponential error suppression promised by quantum error correction, a nearly 30-year-old goal for quantum computing and the key element to unlocking large-scale quantum applications," write Newman and Satzinger.

Stability isn't the only benefit of Willow: Google says it's able to complete a specific quantum task in five minutes that would take one of our fastest supercomputers 10 septillion years (it's a task created specifically for quantum computers, but it still shows what's possible).

Errors are always going to exist in quantum systems, but what researchers are aiming to do is make them infrequent enough for quantum processing to be practical. That will require better hardware, more qubits, and upgraded algorithms.
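To get a feel for the size of that task, the sketch below counts how many successive suppression steps it would take to move from an error rate of about one in a thousand to about one in a trillion, the two figures mentioned earlier. It is back-of-the-envelope arithmetic, not a roadmap: the function name is hypothetical, and it assumes the suppression factor stays constant at every step, which real hardware is not guaranteed to do.

```python
import math


def suppression_steps_needed(current_rate, target_rate, suppression):
    """Smallest number of steps so that current_rate / suppression**steps <= target_rate.

    Assumes a constant suppression factor per step (an idealization).
    """
    if suppression <= 1:
        raise ValueError("suppression factor must be greater than 1")
    if current_rate <= target_rate:
        return 0
    return math.ceil(math.log(current_rate / target_rate) / math.log(suppression))


# Factor of two per step, as in the result described above.
print(suppression_steps_needed(1e-3, 1e-12, 2.0))  # 30

# A hypothetical stronger factor of four per step would halve the number of steps.
print(suppression_steps_needed(1e-3, 1e-12, 4.0))  # 15
```

Closing a gap of nine orders of magnitude takes about 30 halvings, which is one way to see why better hardware, and not just more qubits, is part of the plan.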

"Quantum error correction looks like it's working now, but there's a big gap between the one-in-a-thousand error rates of today and the one-in-a-trillion error rates needed tomorrow," write Newman and Satzinger.

An unedited preview version of the research has been published in Nature.