The most fundamental system we have to quantify the importance of scientific research is broken at its core, a new study reveals – and all it took was a single punctuation mark.

In a bizarre new finding, researchers have demonstrated that academic papers with hyphens in their titles get counted less in citation-counting databases: a freakish phenomenon that warps the frameworks we use to estimate the impact of published academic work.

"Our results question the common belief by the academia, governments, and funding bodies that citation counts are a reliable measure of the contributions and significance of papers," says computer scientist T.H. Tse from the University of Hong Kong.

"In fact, they can be distorted simply by the presence of hyphens in article titles, which has no bearing on the quality of research."

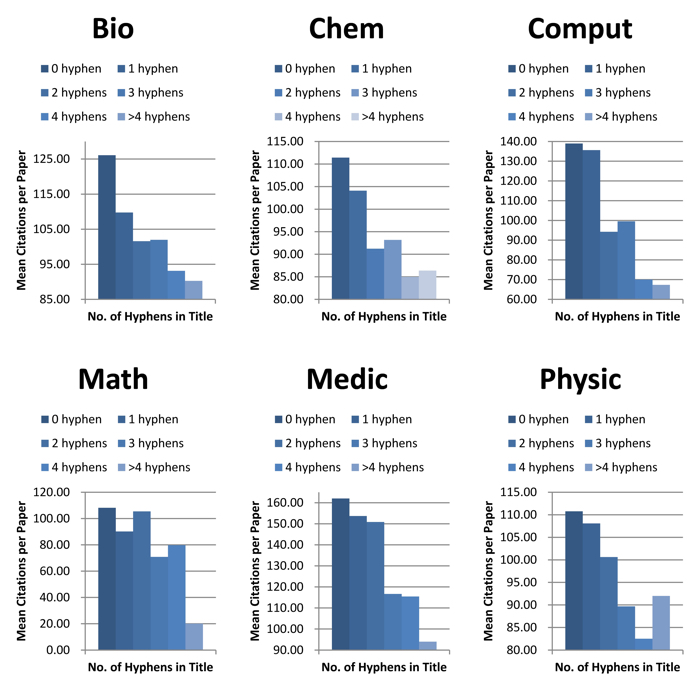

Citations decreasing as more hyphens appear in paper titles (University of Hong Kong)


Tse and fellow researchers from the University of Wollongong in Australia investigated the world's two leading citation indexing systems: Scopus and Web of Science.

These huge databases are used to gauge how influential individual scientific papers (and their authors) are, along with the merit of the research journals that publish the articles (called the 'journal impact factor').

In actuality, it's kind of impossible to quantify how important any published piece of scientific research is in the real world, but one proxy is seeing how many other scientists cite it as a reference in their own individual work.

There are problems with this system; all kinds of weird problems, research shows.

Nonetheless, it's the system we have in place to tell us what academic research is important and trustworthy – similar to how internet search engines rely on the volume of in-bound links to specific web pages to help define search rankings.

But Tse's new discovery shows this entire scientific ranking system is distorted by one weird bug: when researchers use hyphens in the titles of their articles.

Regardless of the quality of academic papers – and how often other researchers mean to cite them – if they include hyphens in their titles, they end up getting counted less by the indexing systems.

"We report a surprising finding that the inclusion of hyphens in paper titles impedes citation counts, and that this is a result of the lack of robustness of the citation database systems in handling hyphenated paper titles," the authors write in their study (which astutely includes a colon, not a hyphen, in its own headline).

"Our results are valid for the entire literature as well as for individual fields such as chemistry."

Robustness in this case refers to software robustness: the ability of computer systems to deal with erroneous input or unexpected situations.

To check Scopus and Web of Science, the team used a technique called metamorphic testing to sniff out robustness defects.

The erroneous inputs and unexpected situations the technique looked for are actually quite simple: for example, typos researchers make when referencing other papers, such as misspelling an author's name, or leaving a single hyphen out of the referenced title in a footnote.

According to the researchers, those small inconsistencies are all it takes for the Scopus and Web of Science indexes to fail to correctly link a citation to the article intended to be cited.

"A plausible reason for the erroneous inputs is that when authors cite a paper with hyphens in the title, they may overlook some of the hyphens," the team explains.

"As a result, citation databases may not be able to match it with the original paper and, hence, the original's citation count is not increased."
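To make the idea concrete, here is a minimal Python sketch of a metamorphic test against a toy citation matcher. It is not the authors' test suite, and it does not reflect how Scopus or Web of Science actually link citations. The index, the example title, and every function name below are hypothetical. The metamorphic relation being checked is simply that dropping one hyphen from a cited title should not change which paper it resolves to.

```python
import re

# Hypothetical one-paper index: exact title string -> paper ID.
INDEX = {"A state-of-the-art survey of fault-tolerant systems": "paper-001"}


def naive_match(cited_title):
    """Exact string lookup: any deviation from the indexed title fails."""
    return INDEX.get(cited_title)


def _normalize(title):
    # Treat hyphens as spaces, collapse whitespace, ignore case.
    return re.sub(r"\s+", " ", title.replace("-", " ")).strip().lower()


# Same hypothetical index, keyed by normalized title.
NORMALIZED_INDEX = {_normalize(t): pid for t, pid in INDEX.items()}


def tolerant_match(cited_title):
    """Lookup that is insensitive to hyphens, spacing, and case."""
    return NORMALIZED_INDEX.get(_normalize(cited_title))


def hyphen_variants(title):
    """Yield follow-up inputs: the title with one hyphen replaced by a space."""
    for i, ch in enumerate(title):
        if ch == "-":
            yield title[:i] + " " + title[i + 1:]


def violations(matcher, title):
    """Return the variants for which the matcher's answer changes.

    Metamorphic relation (an assumption of this sketch): omitting a single
    hyphen from the cited title must not change the matched paper.
    """
    expected = matcher(title)
    return [v for v in hyphen_variants(title) if matcher(v) != expected]


if __name__ == "__main__":
    title = "A state-of-the-art survey of fault-tolerant systems"
    print("naive matcher violations:", len(violations(naive_match, title)))
    print("tolerant matcher violations:", len(violations(tolerant_match, title)))
```

The appeal of this style of testing is that it needs no access to the system's internals: it only compares the output for an original input against the output for a slightly altered one.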

Further, the problem becomes compounded by titles with multiple hyphens, which introduce multiple chances for human error (in missing one of the hyphens), and therefore increase the likelihood of failed citation links.
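A rough back-of-the-envelope sketch shows why more hyphens mean more trouble. It assumes, purely for illustration, that a citing author drops each hyphen independently with the same small probability, and that a single dropped hyphen is enough to break the link. Neither the independence assumption nor the 5 percent figure comes from the study; `prob_failed_link` and `p_miss` are hypothetical names.

```python
def prob_failed_link(num_hyphens, p_miss=0.05):
    """Chance that at least one hyphen is dropped in a citation.

    Assumes each hyphen is missed independently with probability p_miss
    (an illustrative value, not a measured one).
    """
    return 1.0 - (1.0 - p_miss) ** num_hyphens


if __name__ == "__main__":
    for n in range(5):
        print(n, "hyphens ->", round(prob_failed_link(n), 4))
```

Under these assumptions the failure chance rises with every extra hyphen, which matches the direction of the effect the researchers describe, though not necessarily its size.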

On a related note, a 2015 study that analysed citation patterns famously found that papers with short titles receive more citations per paper.

That finding was reported by a number of media outlets, but the new research indicates that title length was a red herring.

Tse's team says the 2015 finding masked the fact that longer titles include more hyphens on average – which is the real dominating factor for why papers with longer titles receive fewer citations (more hyphen-related errors in the text, as opposed to more text generally).

When the team drilled down to discipline level – looking at papers in maths, medicine, physics, chemistry, etc. – they found the same phenomenon at work.

They also discovered that hyphens in paper titles have a negative impact on journal impact factors (JIF), with higher JIF-ranked journals publishing a lower percentage of papers with hyphenated titles (based on a sample of 106 journals in the field of software engineering, used as a test case).

There's a lot more work to be done before we understand everything this new research signifies and challenges – but it's clear the ways we've been trying to rate and rank science are more flawed than we knew, and big things will have to change if we want our appraisals to get better from here.

"As a consequence of this study, we question the reliability of citation statistics and journal impact factors," the authors explain, "because the number of hyphens in paper titles should have no bearing on the actual quality of the respective articles and journals."

The findings are reported in IEEE Transactions on Software Engineering.

Update (13 Jun 2019): Since this article was written, representatives from Clarivate Analytics (the company that owns and operates Web of Science) contacted ScienceAlert in response to this study, and to its finding that the inclusion of hyphens in paper titles impedes citation counts.

"[W]e use both linked and unlinked citations to calculate the Journal Impact Factor," the statement reads.

"We do not rely on article title to unify 'citation to source' records in the Web of Science, as this field can be ambiguous and prone to error, so hyphens alone in a cited reference article title will not prevent a cited reference from linking to a source… Therefore hyphens in the article title have no impact on citation counts within the Journal Citation Report, or the Journal Impact Factor."

In response to Clarivate Analytics' critique of the study and its findings, co-author T.H. Tse told ScienceAlert his team's "actual empirical results show that the presence of hyphens in paper titles has a systematic adverse effect on citation counts and journal impact factors".

Tse says the team's paper outlines several citation indexing failures in Web of Science, with screenshots included in the report.

"In software testing, we verify the consistency between the expected results and the actual outcomes. If we detect any discrepancy, a software failure is revealed," Tse said.

"Our method is a black-box testing technique. The internal data structures (such as linked and unlinked citations) or the actual system process (such as the capture and aggregation of citations) are not relevant to black-box testing."

"They may be considered for white-box testing," he added, "but as researchers outside Clarivate, we cannot do it because the source code of the proprietary software is not available. In any case, black-box testing alone served to reveal a number of failures."