One of the most widespread security systems used on the internet to prevent non-humans from accessing websites has been defeated by a powerful new kind of artificial intelligence (AI).

CAPTCHAs are those frustrating online tests that challenge you to identify fuzzy-looking letters and numbers – which automated bots supposedly can't recognise. Only now they can, thanks to a new type of neural network that more closely approximates human perception.

"Biology has put a scaffolding in our brain that is suitable for working with this world," co-founder of AI startup Vicarious, Dileep George, told Digital Trends.

"It makes the brain learn quickly. So we copy those insights from nature and put it in our model."

George and his team developed a computer model they call a Recursive Cortical Network (RCN), which is able to efficiently deduce the grainy symbols depicted in CAPTCHA tests (aka Completely Automated Public Turing test to tell Computers and Humans Apart).

Traditional CAPTCHAs. Credit: Vicarious

CAPTCHAs were first introduced in the 1990s to prevent automated software robots from doing things like creating fake user accounts and scraping personal information.

The way traditional CAPTCHAs work is simple. Letters and numbers are obfuscated in coloured or fuzzy patterns, which makes it difficult for automated systems to identify the characters and then enter the right text, whereas the human eye can (usually) easily recognise what's displayed.

One of the reasons it's been difficult for AI systems to get past CAPTCHA barriers is the way deep learning neural networks work. These kinds of systems learn by example, developing the ability to recognise letters and numbers by processing thousands of pictures of them.

Learning by example is an effective way to train AI, but according to George, it's easily thrown off by the kind of distortions and variations introduced by CAPTCHAs, unless you have vast amounts of processing power at your disposal.

"It replicates only some aspects of how human brains work," he told NPR.

"We found that there are assumptions the brain makes about the visual world that the [deep learning] neural networks are not making."

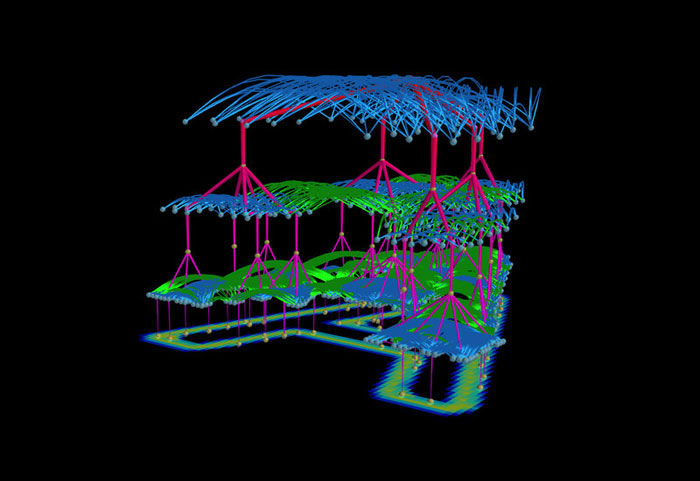

How the RCN sees the letter A. Credit: Vicarious

It was those kinds of assumptions the researchers tried to instil in their Recursive Cortical Network.

Rather than being trained on thousands of images of pre-labelled As, Bs, Cs, and so on in order to distinguish the letters of the alphabet, the RCN uses algorithms that enable it to generalise: it detects patterns in contours and surfaces, and can separate instances of objects even when they overlap.

"During the training phase, it builds internal models of the letters that it is exposed to," George told NPR.

"So if you expose it to As and Bs and different characters, it will build its own internal model of what those characters are supposed to look like. So it would say, these are the contours of the letter, this is the interior of the letter, this is the background."
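To make George's distinction between contour, interior and background concrete, here is a minimal Python sketch. It is a toy illustration only and not the Recursive Cortical Network: the hand-drawn `GLYPH_T` bitmap, the `label_pixels` and `count_labels` helpers, and the simple four-neighbour rule are all assumptions made up for this example, not anything described by Vicarious.

```python
from collections import Counter

# Hypothetical 7x7 bitmap of a thick letter "T" ('#' = ink, '.' = empty).
# This glyph is invented for illustration; it is not data from the study.
GLYPH_T = [
    ".......",
    ".#####.",
    ".#####.",
    ".#####.",
    "..###..",
    "..###..",
    ".......",
]

# Up, down, left, right offsets used to inspect a pixel's neighbours.
NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def label_pixels(glyph):
    """Label every pixel as 'background', 'contour' or 'interior'.

    Assumed toy rule: an ink pixel is 'interior' if all four of its
    neighbours are also ink, and 'contour' otherwise.
    """
    height, width = len(glyph), len(glyph[0])

    def is_ink(r, c):
        # Pixels outside the grid count as empty.
        return 0 <= r < height and 0 <= c < width and glyph[r][c] == "#"

    labels = []
    for r in range(height):
        row = []
        for c in range(width):
            if not is_ink(r, c):
                row.append("background")
            elif all(is_ink(r + dr, c + dc) for dr, dc in NEIGHBOURS):
                row.append("interior")
            else:
                row.append("contour")
        labels.append(row)
    return labels


def count_labels(labels):
    """Count how many pixels received each label."""
    return Counter(label for row in labels for label in row)


if __name__ == "__main__":
    print(count_labels(label_pixels(GLYPH_T)))
```

A real system would have to learn such structure from distorted, overlapping characters rather than apply a fixed rule to a clean bitmap, which is the hard part the researchers set out to solve.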

In their tests, that approach enabled the RCN to be 300 times more data-efficient at cracking CAPTCHAs than deep-learning models, achieving up to 90 percent accuracy in character recognition.

The first iteration of the RCN managed to beat CAPTCHAs back in 2013.

Since then, the researchers have been refining the technology. They held off on explaining how the system works, partly because CAPTCHAs were still in common usage; the details are now outlined in a new paper published last week.

As the internet has evolved, standard text-based CAPTCHAs have become less popular, with companies like Google introducing other, more visual kinds of tests to block automated bots.

That said, now that Vicarious's methods have been described, experts say it's only a matter of time before the traditional CAPTCHA meets its maker.

"We're not seeing attacks on CAPTCHA at the moment, but within three or four months, whatever the researchers have developed will become mainstream, so CAPTCHA's days are numbered," security researcher Simon Edwards from tech security firm Trend Micro told the BBC.

Not that beating or killing CAPTCHA was ever the researchers' end game.

The point of their RCN, ultimately, is to develop AI systems that more closely resemble what we understand about neuroscience, which could one day give machines the ability to do things like imagine, and make use of what we call 'common sense'.

"Robots need to understand the world around them and be able to reason with objects and manipulate objects," George told NPR.

"So those are cases where requiring less training examples and being able to deal with the world in a very flexible way and being able to reason on the fly is very important, and those are the areas that we're applying it to."

The findings are reported in Science.