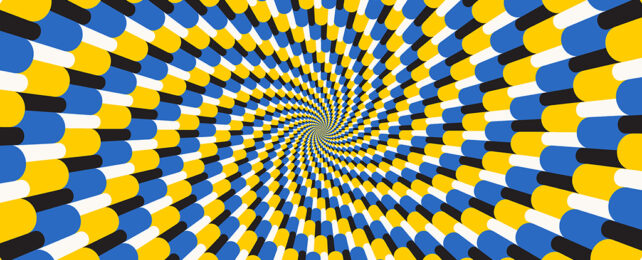

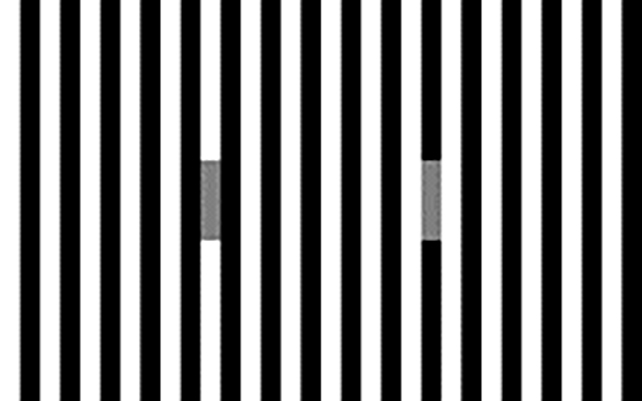

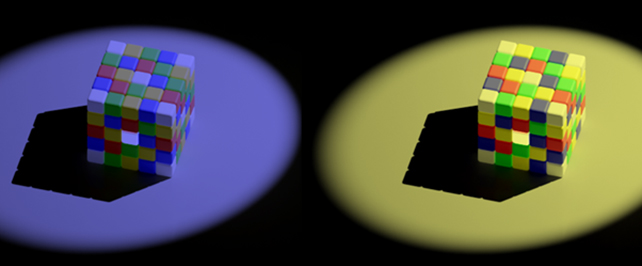

Printed circles that appear to move. Colors that seem different in spite of being identical. Everybody loves a good optical illusion, though precisely where the magic happens is still a mystery in many cases.

A new study by visual ecologist Jolyon Troscianko of the University of Exeter and neuroscientist Daniel Osorio of the University of Sussex, both in the UK, has weighed in on the debate over whether certain errors in perceiving color, shade, and shape arise from the way the eye works or from the brain's neural wiring.

The pair found that specific classes of illusion can be explained by limitations in our visual neurons (the cells that process information coming in from the eyes) rather than by higher-level processing.

These neurons have a finite bandwidth. Building on previous work analyzing how animals perceive ranges of color, the study authors developed a model showing how this limit can affect our perception of patterns at different scales.

"Our eyes send messages to the brain by making neurons fire faster or slower," says Troscianko.

"However, there's a limit to how quickly they can fire, and previous research hasn't considered how the limit might affect the ways we see color."

The new model suggests that limits on processing capacity and metabolic energy force neurons to compress the visual data arriving from our eyes. The effect is less noticeable amid the clutter of natural scenery but has a bigger impact on how we perceive simpler patterns.

It's the same when digital images get compressed: with a photo of a real-world scene, the compression artifacts are more difficult to spot, because the pixels are more jumbled up and varied. In a digital illustration, where lines and borders are fixed and distinct, those compression artifacts tend to stand out.

The findings could help explain how we are able to perceive the contrast on modern televisions with built-in HDR (High Dynamic Range). In theory, our eyes shouldn't be capable of detecting the enormous difference between the lightest white and the darkest black that this technology can display.

The researchers posit that neurons have evolved to be as efficient as possible: some are tuned to notice tiny differences in shade, while others are less sensitive to small differences but much better at handling large ranges of contrast. This division of labor helps explain why the latest HDR TVs look so impressive.

"Our model shows how neurons with such limited contrast bandwidth can combine their signals to allow us to see these enormous contrasts, but the information is compressed – resulting in visual illusions," says Troscianko.

"The model shows how our neurons are precisely evolved to use every bit of capacity."
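To make the idea of limited bandwidth more concrete, here is a toy sketch in Python. It is not the authors' model. It assumes each neuron has a saturating, Naka-Rushton-style response (a standard textbook description of how neural responses level off with increasing contrast) and can signal only eight distinguishable firing levels; the names `neuron_level` and `population_code` and all parameter values are hypothetical. A single neuron tuned to small differences saturates quickly, while a population tuned to staggered ranges covers several orders of magnitude of contrast, but only by compressing them.

```python
LEVELS = 8  # hypothetical: number of distinguishable firing levels per neuron


def neuron_level(contrast: float, half_sat: float, exponent: float = 2.0) -> int:
    """Quantized saturating (Naka-Rushton-style) response of one neuron.

    Returns an integer in 0..LEVELS-1. Assumes contrast and half_sat are positive.
    """
    r = contrast**exponent / (contrast**exponent + half_sat**exponent)
    return round(r * (LEVELS - 1))


def population_code(contrast: float, half_sats: list[float]) -> int:
    """Sum of quantized responses from neurons tuned to different contrast ranges."""
    return sum(neuron_level(contrast, h) for h in half_sats)


# Contrasts spanning six orders of magnitude (0.01 to 10,000).
contrasts = [10.0**k for k in range(-2, 5)]

# One sensitive neuron: it saturates at its top level for everything above ~10.
single = [neuron_level(c, 1.0) for c in contrasts]

# Five neurons whose half-saturation points are spaced tenfold apart.
population = [population_code(c, [10.0**k for k in range(-1, 4)]) for c in contrasts]

print(single)      # [0, 0, 4, 7, 7, 7, 7]
print(population)  # [0, 4, 11, 18, 25, 32, 35]
```

In this toy, each tenfold jump in contrast adds roughly the same number of levels to the population signal, so huge physical differences are squeezed into a compact code. That kind of compression is the sort of information loss the researchers link to visual illusions.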

This applies to the many illusions that arise from differences in contrast. In these cases, much of our color perception depends on context, and the new model pinpoints which part of our visual processing system is responsible.

The researchers tested the computational model against human perception of various optical illusions, responses recorded from primate retinas, and more than 50 examples of brightness and color phenomena, and found that it held true.

Previously, it was thought that other factors – our existing knowledge of shapes and objects, for example, or eye movements – might be responsible for optical illusions fooling us so completely. It seems like those earlier ideas might need a rethink.

"This throws into the air a lot of long-held assumptions about how visual illusions work," says Troscianko.

The research has been published in PLOS Computational Biology.