As far as stars go, M-class stars – better known as red dwarfs – sound like they should be fairly benign.

These stars are significantly cooler than our Sun, and as their name suggests, they're also relatively small, in terms of both mass and surface area.

Because of their relatively low temperatures, they burn through their fuel slowly, which means they have very long lifespans.

They're also extremely common: some 70 percent of the stars in the Milky Way are estimated to fall into the M class.

The combination of their stability and abundance, along with the relatively high chance that rocky planets orbiting red dwarfs fall within the system's habitable zone, means that these systems have sometimes been proposed as promising places to search for life.

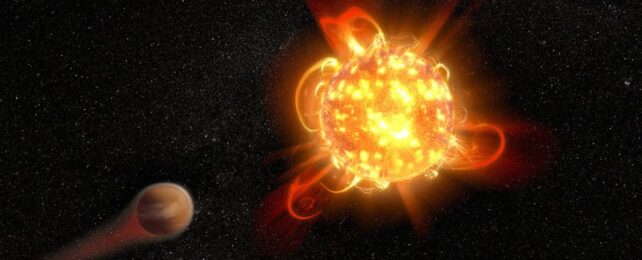

However, red dwarfs do have one unfortunate habit: compared to their larger cousins, they produce an awful lot of stellar flares.

There's been discussion over the years about what this might mean for the habitability of planets in these systems – and in bad news for potential extraterrestrial denizens of red dwarf systems, a new paper published this month suggests these flares could be significantly more dangerous than we thought.

Sifting through a decade's worth of observations from the now-decommissioned GALEX space telescope, the paper's authors examined data from some 300,000 stars and focused on 182 flares that originated from M-class stars.

As the paper notes, "[previous] large-scale observational studies of stellar flares have primarily been conducted in the optical wavelengths." This study focuses instead on the ultraviolet (UV) radiation emitted by these events, specifically the near UV (175–275 nm) and far UV (135–175 nm) ranges.

While it isn't necessarily inimical to the development of the complex molecules that we believe to be a prerequisite for life, radiation of this sort can have dramatic effects on a planet's potential habitability.

The dose makes the poison: in relatively modest quantities, the high-energy photons produced by stellar flares might help catalyze the formation of such compounds, but in large enough quantities, these photons could also strip away the atmospheres of such planets, including protective layers of ozone.

The new research suggests that previous studies may well have significantly underestimated the amount of UV radiation produced by stellar flares. As the paper explains, it has been common practice to model the electromagnetic radiation from flares as following a blackbody distribution.

In these models, flares are assigned a temperature of around 9,000 kelvin (about 8,727°C, or 15,740°F), much hotter than the surfaces of their parent stars: the coolest red dwarfs have a surface temperature of around 1,727°C (3,140°F), while the hottest can approach 3,227°C (5,840°F).
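The Celsius figures in this comparison are conversions of round kelvin values: 9,000 K for the flare model (the same figure the paper quotes for its blackbody), and 2,000 K and 3,500 K for the coolest and hottest red dwarfs. A minimal Python check, using hypothetical helper names:

```python
def kelvin_to_celsius(kelvin: float) -> float:
    """Convert kelvin to degrees Celsius."""
    return kelvin - 273.15


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Round kelvin values implied by the article's Celsius figures
for label, kelvin in [("flare model", 9000), ("coolest red dwarf", 2000),
                      ("hottest red dwarf", 3500)]:
    c = kelvin_to_celsius(kelvin)
    print(f"{label}: {kelvin} K = {c:,.0f} °C = {celsius_to_fahrenheit(c):,.0f} °F")
```

Converting the unrounded Celsius value gives 15,740°F for 9,000 K; rounding to 8,727°C first nudges the Fahrenheit figure up by one.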

This new research, however, suggests that stellar flare emissions do not in fact follow such distributions. Of the 182 events examined by the researchers, 98 percent had a UV output exceeding what would have been expected had they followed a conventional blackbody spectrum. As the paper notes, "This suggests that a constant 9,000 K blackbody [spectral energy distribution] is insufficient to account for the levels of [far ultraviolet] emission we observe."
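To make the blackbody comparison concrete, the sketch below integrates Planck's law over the two UV bands quoted earlier to get the far-to-near UV ratio a 9,000 K blackbody would emit, then expresses a flare's far UV output as a multiple of that prediction. It is a minimal illustration under stated assumptions, not the paper's actual pipeline: the band edges are the article's rounded ranges treated as sharp cut-offs rather than the real GALEX filter responses, and the helper names (`band_fraction`, `predicted_fuv_to_nuv`, `fuv_excess`) are hypothetical.

```python
import math

# Physical constants in SI units (CODATA 2018 values)
H = 6.62607015e-34      # Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
K_B = 1.380649e-23      # Boltzmann constant, J/K
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

# Band edges in nm as quoted in the article (assumed sharp, not real filter curves)
FUV_NM = (135.0, 175.0)
NUV_NM = (175.0, 275.0)


def planck_lambda(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance B_lambda(T), in W sr^-1 m^-3."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(x)


def band_radiance(band_nm: tuple[float, float], temperature_k: float,
                  steps: int = 2000) -> float:
    """Integrate B_lambda across a band with composite Simpson's rule (steps must be even)."""
    lo, hi = band_nm[0] * 1e-9, band_nm[1] * 1e-9  # nm -> m
    dx = (hi - lo) / steps
    total = planck_lambda(lo, temperature_k) + planck_lambda(hi, temperature_k)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * planck_lambda(lo + i * dx, temperature_k)
    return total * dx / 3.0


def band_fraction(band_nm: tuple[float, float], temperature_k: float) -> float:
    """Share of the blackbody's total output (sigma T^4 / pi per steradian) inside the band."""
    return band_radiance(band_nm, temperature_k) / (SIGMA * temperature_k**4 / math.pi)


def predicted_fuv_to_nuv(temperature_k: float = 9000.0) -> float:
    """FUV/NUV energy ratio a pure blackbody at this temperature would emit."""
    return band_radiance(FUV_NM, temperature_k) / band_radiance(NUV_NM, temperature_k)


def fuv_excess(observed_fuv: float, observed_nuv: float,
               temperature_k: float = 9000.0) -> float:
    """Observed FUV divided by the FUV expected from a blackbody scaled to the observed NUV.

    A value above 1 means the flare emits more FUV than the blackbody model allows.
    """
    return observed_fuv / (observed_nuv * predicted_fuv_to_nuv(temperature_k))


if __name__ == "__main__":
    for t in (3000.0, 9000.0):
        print(f"T = {t:.0f} K: FUV share {band_fraction(FUV_NM, t):.2e}, "
              f"NUV share {band_fraction(NUV_NM, t):.2e}, "
              f"FUV/NUV {predicted_fuv_to_nuv(t):.3g}")
```

Because `fuv_excess` works with a ratio, the two observed energies only need to be in the same units. A fuller treatment would fold in the actual GALEX filter response curves, which this sketch ignores.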

If the stellar flares produced by red dwarfs do indeed churn out disproportionately large amounts of UV radiation, then planets orbiting these stars may well be more hostile to life than we thought, even if they satisfy other criteria for being potentially habitable (such as a surface temperature that would allow water to exist as a liquid).

The study was published in the Monthly Notices of the Royal Astronomical Society.