Artificial intelligence technology is advancing at a blistering pace, and a trio of scientists is calling for more accountability and transparency in AI before it's too late.

In their paper, the UK-based researchers say existing rules and regulations don't go far enough in limiting what AI can do – and recommend that robots be held to the same standards as the humans who make them.

There are a number of issues, say the researchers from the Alan Turing Institute, that could lead to problems down the line, including the diverse nature of the systems being developed and a lack of transparency about the inner workings of AI.

"Systems can make unfair and discriminatory decisions, replicate or develop biases, and behave in inscrutable and unexpected ways in highly sensitive environments that put human interests and safety at risk," the team writes in its paper.

In other words: how do we know we can trust AI?

Even before we get to the stage of the robots rising up, AI that is unaccountable and impossible to decipher is going to cause problems, from working out the cause of an accident between self-driving cars to understanding why a bank's computer has turned you down for a loan.

The researchers also flag systems that are mostly automated but involve some human input, which may be enough to exclude them from regulation.

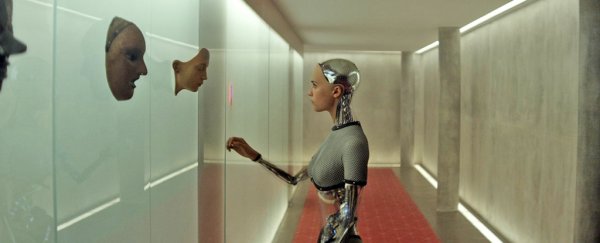

Airport security is one area where more transparency is needed, say researchers. Image: Wachter, Mittelstadt, Floridi

Among the suggestions put forward by the researchers is the idea of having a set of guidelines that covers robotics, AI, and decision-making algorithms as a group, though they admit that these diverse areas are hard to regulate as a whole.

The scientists also acknowledge that building extra transparency into AI systems can hurt their performance, and that competing tech companies might not be too willing to share their secret sauces.

What's more, with AI now essentially teaching itself in some systems, we may even be beyond the stage where we can explain what's happening.

"The inscrutability and the diversity of AI complicate the legal codification of rights, which, if too broad or narrow, can inadvertently hamper innovation or provide little meaningful protection," the researchers write.

It's a delicate balancing act.

It's not the first time that these three researchers have criticised current AI rules: in January they called for an artificial intelligence watchdog in response to the General Data Protection Regulation drawn up by the EU.

"We are already too dependent on algorithms to give up the right to question their decisions," one of the researchers, Luciano Floridi from the University of Oxford in the UK, told Ian Sample at The Guardian.

Floridi and his colleagues cited cases from Austria and Germany where they felt people hadn't been given enough information about how AI algorithms had reached their decisions.

Ultimately, AI is going to be a boon for the human race, whether it's helping elderly people keep their independence through self-driving cars, or spotting early signs of disease before doctors can.

Right now, though, experts are scrambling to put safety measures in place that can stop these systems getting out of control or becoming too dominant, and that's where the scientists behind this new paper want to see us putting our efforts.

"Concerns about fairness, transparency, interpretability, and accountability are equivalent, have the same genesis, and must be addressed together, regardless of the mix of hardware, software, and data involved," they write.

The paper has been published in Science Robotics.