A supercomputer at Oak Ridge National Laboratory in the US has just pulled off the largest astrophysical simulation of the Universe to date.

In November 2024, physicists used 9,000 computing nodes of the Frontier supercomputer to simulate a volume of the expanding Universe measuring more than 31 billion cubic megaparsecs.

The results of the project, known as ExaSky, will help astrophysicists and cosmologists understand the evolution and physics of the Universe, including probes into the mysterious nature of dark matter.

"There are two components in the Universe: dark matter – which as far as we know, only interacts gravitationally – and conventional matter, or atomic matter," explains physicist Salman Habib of Argonne National Laboratory in the US, who led the effort.

"So, if we want to know what the Universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies; the astrophysical 'kitchen sink', so to speak. These simulations are what we call cosmological hydrodynamics simulations."

When we peer through space, across billions of light-years, we are also peering through time. As such, we are able to piece together a picture of how the Universe evolved. But the time things take to change on cosmic scales is huge, and we don't get to see those changes take place in real time.

Simulations are one of the best tools we have to try to understand how the Universe evolved. We can plug in the numbers, speed up time, rewind it, zoom in, zoom out, and overall just play Supreme Being over vast expanses of the cosmos.

That sounds simple, but it's not. Space is huge, and extraordinarily complex. It takes a lot of sophisticated mathematics, and an extremely powerful supercomputer. Even then, a lot of things may need to be left out for the sake of efficiency. Previous simulations at this scale, for example, had to leave out many of the physical processes that make up a hydrodynamic simulation.

"If we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you're talking about looking at huge chunks of time – billions of years of expansion," Habib says. "Until recently, we couldn't even imagine doing such a large simulation like that except in the gravity-only approximation."

It has taken years of refining the algorithms, the math, and the Hardware/Hybrid Accelerated Cosmology Code (HACC) required to run the ExaSky simulation.

But with upgrades that made Frontier the fastest supercomputer in the world at the time, the team was able to scale up their simulation to model the expansion of the Universe.

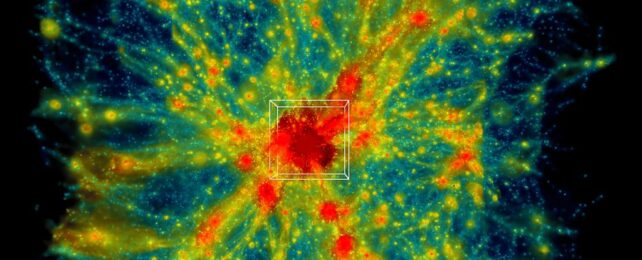

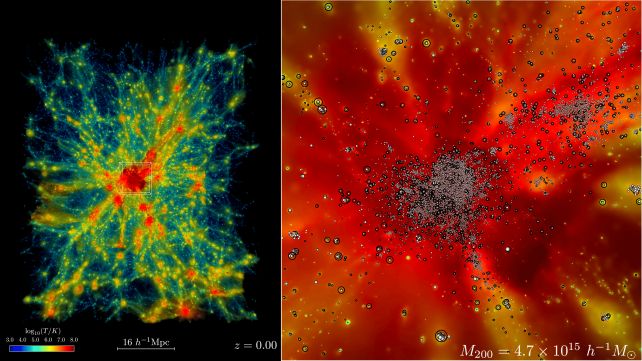

It's going to be a little while before we see any published analyses based on the simulation, but you can enjoy a little teaser. A video released by the team shows a massive cluster of galaxies coming together in a volume of space that measures 64 by 64 by 76 megaparsecs, or 311,296 cubic megaparsecs.

This volume represents just 0.001 percent of the entire volume of the simulation, so we're expecting to see some pretty mind-blowing results in the future.
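The arithmetic behind these figures is easy to check. The short Python sketch below multiplies out the teaser region's dimensions and compares the result with the total simulated volume. It assumes a total of exactly 31 billion cubic megaparsecs. Because the article only says "more than 31 billion", the percentage it prints is an upper bound, not an exact share.

```python
# Dimensions of the teaser region shown in the team's video, in megaparsecs.
teaser_dims_mpc = (64, 64, 76)
teaser_volume = teaser_dims_mpc[0] * teaser_dims_mpc[1] * teaser_dims_mpc[2]

# Assumption: the article gives the total only as "more than 31 billion"
# cubic megaparsecs, so 31e9 is treated as a lower bound on the total.
total_volume_lower_bound = 31e9

# A lower bound on the total gives an upper bound on the teaser's share.
fraction_percent = teaser_volume / total_volume_lower_bound * 100

print(f"Teaser volume: {teaser_volume:,} cubic Mpc")
print(f"Share of simulation: at most ~{fraction_percent:.3f} percent")
```

Running it prints a teaser volume of 311,296 cubic megaparsecs and a share of about 0.001 percent, which matches the figures quoted above.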

"It's not only the sheer size of the physical domain, which is necessary to make direct comparison to modern survey observations enabled by exascale computing," says astrophysicist Bronson Messer of the Oak Ridge National Laboratory.

"It's also the added physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier."