Having insults hurled our way is never going to do wonders for our self-esteem, but it turns out that disparaging remarks can cut us even when they're delivered by a robot.

You might think that critical comments from robots – droids only saying what they've been programmed to say, with no consciousness or feelings of their own – are the sort of barbs we can easily brush off.

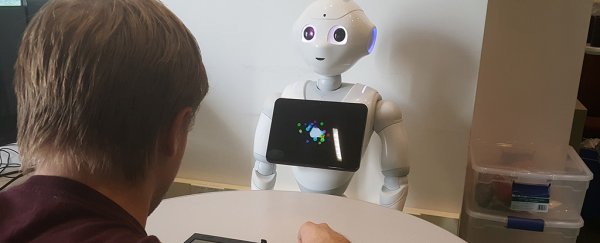

Not so, based on a test involving 40 human participants who had to put up with insults flung by the humanoid robot Pepper during a series of games. Players performed worse when Pepper discouraged them, and better when the machine was more encouraging.

The work could be useful in teaching us how we might use robots as companions or as learning tools in the future. When the robot uprising comes, it might well start with a few snarky comments.

"This is one of the first studies of human-robot interaction in an environment where they are not cooperating," says computer scientist Fei Fang, from Carnegie Mellon University (CMU).

"We can expect home assistants to be cooperative, but in situations such as online shopping, they may not have the same goals as we do."

Each participant played a game called Guards and Treasures 35 times against Pepper. The game is an example of a Stackelberg game, with a defender and an attacker, and is intended to teach rationality.

While the participants all improved in terms of their rationality over the course of the test, those who were getting insulted by their robot opponent didn't hit the same level of scores as those who were getting praised. Players met with a critical robot had a more negative view of it too, as you might expect.

Verbal abuse dished out by Pepper included comments like "I have to say you are a terrible player" and "over the course of the game your playing has become confused".

The findings match up with previous research showing that 'trash talk' really can have a negative effect on gameplay – but this time the talk is coming from an automated machine.

Although this was only a small-scale study, as our interactions with robots get more frequent – whether that's via a smart speaker in a home or as a bot designed to improve mental health in a hospital – we need to understand how humans react to these seemingly personable machines.

In situations where robots might think they know better than us, such as getting directions from A to B or buying something in a store, programmers need to know how best to handle such disagreements when coding a droid.

Next, the team behind the study wants to look at non-verbal cues given out by robots.

The researchers report that some of the study participants were "technically sophisticated" and fully understood that it was the robot that was putting them off – but were still affected by the programmed responses.

"One participant said, 'I don't like what the robot is saying, but that's the way it was programmed so I can't blame it,'" says computer scientist Aaron Roth, from CMU.

The research has yet to be published in a peer-reviewed journal, but has been presented at the IEEE International Conference on Robot & Human Interactive Communication in India.