Researchers have developed a robot that takes its cues from human brain waves, changing its actions almost instantaneously if a person observes it making a mistake.

The technology uses new algorithms that monitor brain activity for specific signals, and it could pave the way for a future where humans control robots almost effortlessly, simply by watching what they do.

"Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button, or even say a word," says the director of MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), Daniela Rus.

"A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars, and other technologies we haven't even invented yet."

While virtual assistants like Apple's Siri and Amazon's Alexa have liberated us from buttons and levers and made hands-free technology control a reality, the truth is that these systems are still fairly limited.

Not only is their voice recognition patchy at times, but the software behind them is designed to respond only to particular words and phrases, which means users need to learn to speak in certain ways to get the systems' attention.

Existing brain-computer interfaces that let people direct robots with their thoughts have overcome some of the issues holding back voice recognition, but users still have to train themselves to think in a very particular way to get machines to do what they want.

That kind of training can take a long time to master. Even once you've got the hang of it, forcing yourself to produce a set of specific, control-based thoughts can be mentally exhausting.

To get around this, the CSAIL researchers designed a system based on what are called "error-related potentials" (ErrPs): brain signals that are generated whenever we notice a mistake, and which arise naturally without requiring any serious concentration.

"As you watch the robot, all you have to do is mentally agree or disagree with what it is doing," says Rus.

"You don't have to train yourself to think in a certain way – the machine adapts to you, and not the other way around."

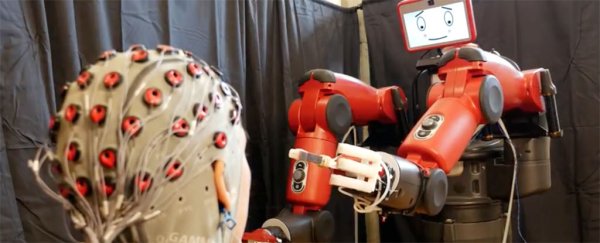

The researchers trialled their system using an industrial robot called Baxter. For the robot to be able to pick up on ErrPs, a human participant needs to wear an electroencephalography (EEG) cap that can monitor brain activity.

In the experiment, Baxter performed simple right/wrong exercises, such as an object-sorting task in which it placed cans of spray paint in one box and reels of wire in another.

Using new algorithms developed by the team to classify ErrPs, the system was able to pick up on the volunteer's brain signals in the space of just 10 to 30 milliseconds.

That means if a human noticed Baxter make an error by putting an object into the wrong box, the robot could correct the mistake almost instantaneously.

In testing, the system was able to detect ErrPs in 70 percent of cases where the human participant noticed the robot making a mistake.

The research hasn't yet been peer-reviewed by other scientists, and the team ultimately hopes to push the accuracy rate above 90 percent. The researchers have also built in a back-up system in which the robot can ask the human for a response if it's unsure whether it has detected an ErrP.

They are also working on a 'universal' version of the system, since right now it needs to be calibrated for each individual user. They also aim to fine-tune the sensitivity of the ErrP detection, so that robots could detect a range of nuanced responses from a human observer, not simply right or wrong.

With enough refinement, the researchers think such a setup could even help control self-driving vehicles, with passengers' reactions to the journey passed along as real-time feedback to an autonomous driving system.

That day might still be a long time away, but it definitely looks like we're edging closer.

"We're taking baby steps towards having machines learn about us, and having them adjust to what we think," Rus told Matt Reynolds at New Scientist.

"In the future it will be wonderful to have humans and robots working together on the terms of the human."

The findings are due to be presented at the IEEE International Conference on Robotics and Automation in Singapore in May.